Whatever the end product, all designers have specific tasks to solve, and their solutions will be influenced by the available resources and by constraints of cost, time, physical size and technology choice. At the heart of many a good product, the ubiquitous microcontroller often has a crucial influence on system power design. In a world increasingly concerned with energy efficiency, users are entitled to demand more from it. The way microcontrollers are built and operate needs to evolve dramatically if they are to achieve the best possible performance from limited battery resources.

Bearing in mind that the cost of even a typical coin cell battery can be relatively high compared to that of a microcontroller, there are obvious advantages in designing a system that offers the best possible energy efficiency. First, it can enable designers to reduce the cost and size of the battery. Second, it can significantly extend the lifetime of the battery, reducing the frequency of battery replacement and, for certain products, the frequency, cost and ‘carbon footprint’ of product maintenance call-outs.

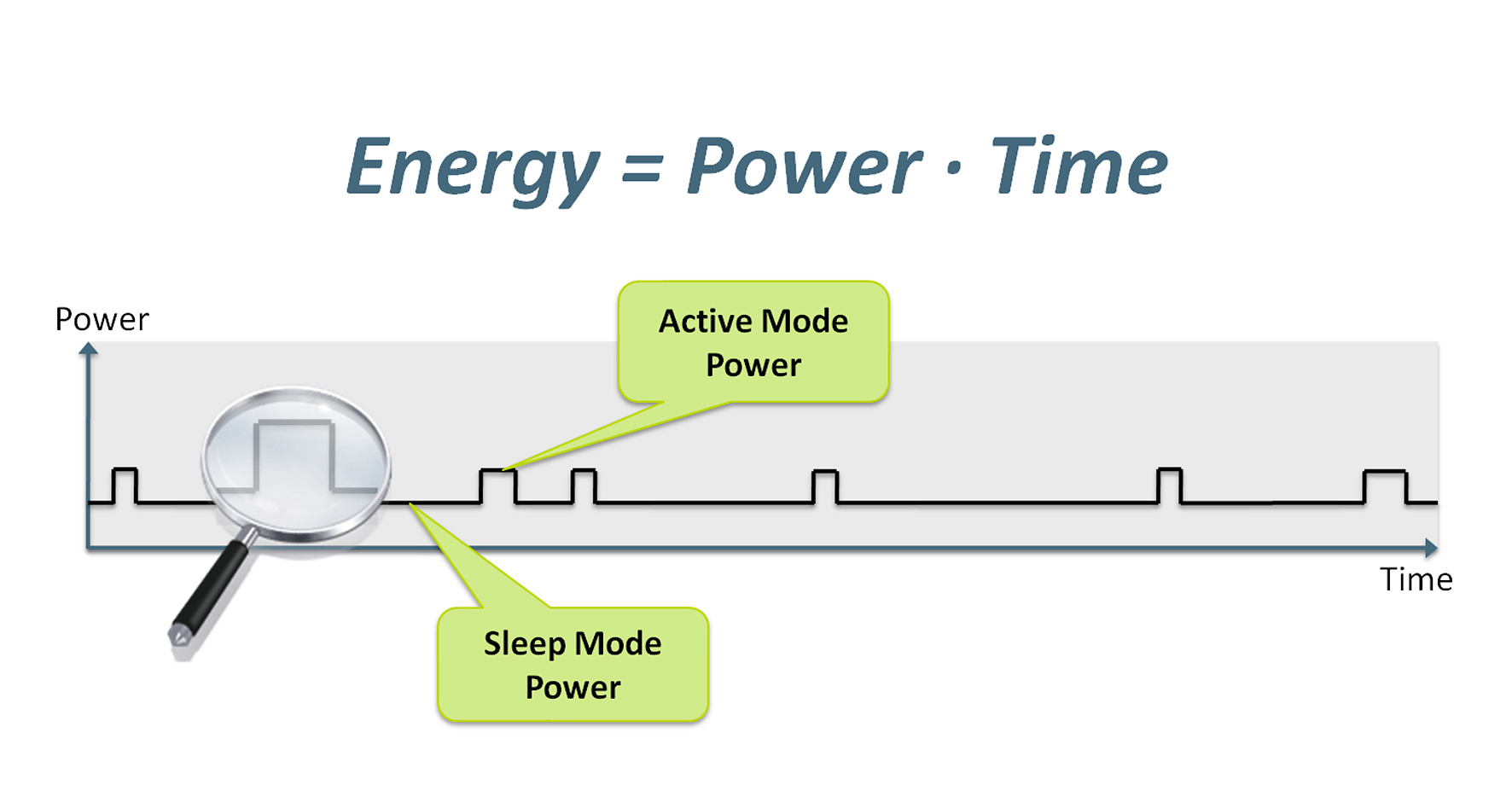

Microcontroller vendors, like suppliers of many other electronic components, are these days very keen to stress their products’ ‘ultra low power’ credentials. That is perfectly appropriate where a device’s dynamic performance merits it. However, with a finite amount of charge available from a battery cell, it is how a microcontroller uses energy (i.e. power integrated over time) that needs to be borne more closely in mind.

Microcontroller applications improve their energy efficiency by operating in several states – most notably active and sleep modes that consume different amounts of energy.

Product designers need to minimize the product of current and time over all phases of microcontroller operation, throughout both active and sleep periods (Figure 1). Not only does every microamp count, but so does every microsecond that every function takes. This relationship between current and time makes the comparison of 8- and 16-bit microcontrollers with 32-bit microcontrollers less straightforward. Considering only their current consumption in deep-sleep mode, it is easy to understand why 8-bit and 16-bit microcontrollers have held an attractive position in energy-sensitive applications, where microcontroller duty cycles can be very low. A microcontroller may, after all, stay in a deep-sleep state for perhaps 99% of the time.
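The current-times-time budget described above can be sketched numerically. The figures used here (5 mA active for 10 ms, 1 µA asleep for the rest of a 1 s period, a 220 mAh coin cell) are illustrative assumptions rather than measurements from any particular device, and the function names are hypothetical.

```python
def charge_per_cycle_uc(i_active_ua, t_active_s, i_sleep_ua, t_sleep_s):
    """Charge drawn over one active-plus-sleep cycle, in microcoulombs."""
    return i_active_ua * t_active_s + i_sleep_ua * t_sleep_s


def average_current_ua(i_active_ua, t_active_s, i_sleep_ua, t_sleep_s):
    """Average current over the whole cycle, in microamps."""
    charge = charge_per_cycle_uc(i_active_ua, t_active_s, i_sleep_ua, t_sleep_s)
    return charge / (t_active_s + t_sleep_s)


def battery_life_hours(capacity_mah, avg_current_ua):
    """Idealised lifetime: ignores self-discharge and capacity derating."""
    return capacity_mah * 1000.0 / avg_current_ua


# Assumed duty cycle: asleep for 99% of a 1 s period.
avg_ua = average_current_ua(5000.0, 0.010, 1.0, 0.990)
# Assumed 220 mAh coin cell.
life_h = battery_life_hours(220.0, avg_ua)
print(f"average current: {avg_ua:.2f} uA, lifetime: {life_h / 24:.0f} days")
```

With these assumed figures, the 10 ms of active time accounts for roughly 98% of the charge drawn, even though the device sleeps for 99% of the period. That is why shortening the active phase can matter as much as lowering the sleep current.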

However, if product designers are concerned with every microamp and every microsecond that each function takes, then a 32-bit microcontroller should be considered even for the ‘simplest’ of product designs. The higher performance of a 32-bit processor enables the microcontroller to finish tasks more quickly, so that it can spend more time in low-power sleep modes, which lowers overall energy consumption. 32-bit microcontrollers are therefore not necessarily ‘application overkill’.
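A minimal sketch of this trade-off follows, using two hypothetical parts. All currents and task times are assumptions chosen for illustration: an 8-bit device drawing 3 mA for a 4 ms task, and a 32-bit device drawing 6 mA but finishing the same task in 1 ms, both sleeping at 1 µA and waking once per second.

```python
def charge_per_period_uc(i_active_ua, t_active_s, i_sleep_ua, period_s):
    """Charge per wake-up period in microcoulombs: one active burst, then sleep."""
    t_sleep_s = period_s - t_active_s
    return i_active_ua * t_active_s + i_sleep_ua * t_sleep_s


PERIOD_S = 1.0  # assumed: one wake-up per second

# Hypothetical 8-bit part: lower active current, but the task takes 4 ms.
q_8bit = charge_per_period_uc(3000.0, 0.004, 1.0, PERIOD_S)

# Hypothetical 32-bit part: twice the active current, task done in 1 ms.
q_32bit = charge_per_period_uc(6000.0, 0.001, 1.0, PERIOD_S)

print(f"8-bit:  {q_8bit:.3f} uC per period")
print(f"32-bit: {q_32bit:.3f} uC per period")
```

Under these assumptions the 32-bit part draws about 7 µC per period against about 13 µC for the 8-bit part, because its fourfold speed-up outweighs its doubled active current. The result would roughly reverse if the speed-up were smaller than the ratio of active currents, so real datasheet figures are needed before drawing a conclusion for a given design.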

More than that, even simple operations on 8-bit or 16-bit variables can call for a 32-bit processor if system energy usage goals are to be achieved. By harnessing the full array of low-power design techniques available today, 32-bit cores can offer a variety of low-power modes with rapid wake-up times that are on par with those of 8-bit microcontrollers.

There is a common misconception that switching from an 8-bit microcontroller to a 32-bit microcontroller will result in bigger code size, which directly affects the cost and power consumption of end products. This is born of the widespread impression that 8-bit microcontrollers use 8-bit instructions and 32-bit microcontrollers use 32-bit instructions. In reality, many instructions in 8-bit microcontrollers are 16 or 24 bits long.

The ARM Cortex-M3 and Cortex-M0 processors are based on Thumb-2 technology, which provides excellent code density. Thumb-2 microcontrollers have 16-bit as well as 32-bit instructions, with the 32-bit instruction functionality a superset of the 16-bit version. Typical output from a C compiler consists of about 90% 16-bit instructions; the 32-bit version is used only when the operation cannot be performed with a 16-bit instruction. As a result, most of the instructions in an ARM Cortex microcontroller program are 16 bits long. That is shorter than many of the instructions in 8-bit microcontrollers, so a 32-bit processor typically yields smaller compiled code than 8- or 16-bit microcontrollers.
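As a rough worked example, the 90% figure quoted above implies an average instruction length well under 32 bits. The helper name below is hypothetical, and the remaining 10% is assumed to consist entirely of 32-bit instructions.

```python
def average_instruction_bytes(mix):
    """Weighted mean instruction length; `mix` maps length in bytes to its fraction."""
    assert abs(sum(mix.values()) - 1.0) < 1e-9, "fractions must sum to 1"
    return sum(length * fraction for length, fraction in mix.items())


# From the text: about 90% 16-bit (2-byte) instructions;
# assumed here: the other 10% are 32-bit (4-byte) instructions.
thumb2_avg = average_instruction_bytes({2: 0.9, 4: 0.1})
print(f"average instruction length: {thumb2_avg:.1f} bytes")
```

This gives an average of about 2.2 bytes per instruction. Total code size also depends on how many instructions a given task needs, which this sketch does not model.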

The second part of this three-part series looks more deeply at the issues around microcontroller sleep modes.