Innovative multimedia applications – think of multi-camera surveillance or multipoint videoconferencing – are demanding in both processing power and bandwidth. Moreover, video processing workloads are highly dynamic and subject to stringent real-time constraints. The trend is to run these applications on commodity hardware, because it offers more flexibility and lowers hardware cost. This hardware is often heterogeneous: a mix of central processing units (CPUs), graphics processing units (GPUs) and digital signal processors (DSPs).

But implementing these multimedia applications efficiently on one or more heterogeneous processors is a challenge, especially if you also want to take the highly dynamic workload into account. State-of-the-art solutions tackle this challenge by assigning specific tasks to fixed types of processors. However, such static assignments cannot adapt to variations in workload or resources, and they often lead to poor efficiency and over-dimensioning.

Another strategy is dynamic task assignment. To prove that this is feasible, we have implemented middleware that performs both runtime monitoring of workloads and resources, and runtime assignment of tasks onto and between multiple heterogeneous processors. As platforms, we used an NVIDIA Quadro FX 3700 and two quad-core Intel Xeon processors.

Load balancing between the cores of a device

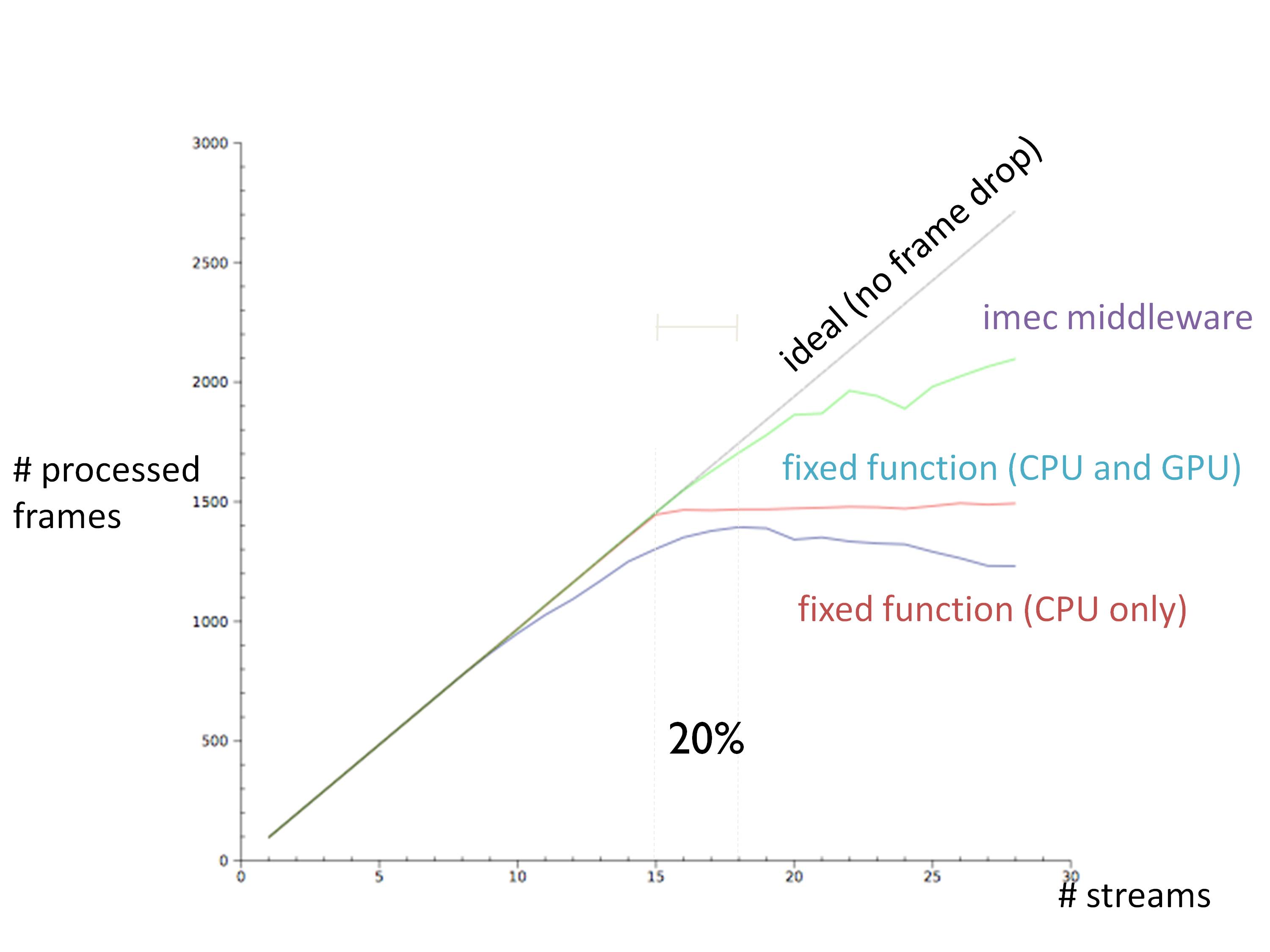

Our experiment consists of running multiple pipelines in which MPEG-4 decoding, frame conversion and AVC video encoding run as serialized tasks. Of all these tasks, the most demanding one, motion estimation (part of video encoding), can run either on a CPU or CUDA-accelerated on the GPU. Figure 1 compares our experimental runtime assignment strategy with two static assignment strategies that mimic the behavior of state-of-the-art OS-based systems.

The first static strategy lets the operating system assign all tasks to the CPU cores. The second assigns all CUDA-accelerated tasks to the GPU, while the remaining tasks are scheduled on the CPU cores. The latter, by using the GPU versions of the most demanding tasks, increases the number of streams that can be processed from 10 to 15. However, at this point the GPU becomes the bottleneck and limits the number of frames that can be processed.

The last strategy, the dynamic one, overcomes both the CPU and the GPU bottleneck by finding, at runtime, an optimal balance between CPU and GPU assignments. This increases throughput by 20% compared with fixed assignments to GPU and CPU. The efficiency and flexibility of the available hardware thus increase, while the overhead remains marginal at around 0.05% of the total execution time.
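
The idea behind such a runtime balance can be illustrated with a small sketch. It is not the middleware used in the experiment: the greedy "earliest finish time" rule, the `Processor` class and the per-task costs below are all hypothetical, chosen only to show how sending some tasks to the CPU relieves a saturated GPU.

```python
from dataclasses import dataclass


@dataclass
class Processor:
    name: str
    cost_per_task_ms: float      # hypothetical time to run one motion-estimation task
    busy_until_ms: float = 0.0   # monitored load: when the current queue drains


def assign(task_count, processors):
    """Greedy runtime assignment: each task goes to the processor
    that would finish it earliest given its current queue."""
    placement = []
    for _ in range(task_count):
        best = min(processors, key=lambda p: p.busy_until_ms + p.cost_per_task_ms)
        best.busy_until_ms += best.cost_per_task_ms
        placement.append(best.name)
    return placement


def makespan(processors):
    """Time at which the last processor finishes its queue."""
    return max(p.busy_until_ms for p in processors)


# Assumed costs: the GPU is three times faster than a CPU core for this task.
procs = [Processor("cpu", 30.0), Processor("gpu", 10.0)]
placement = assign(8, procs)
print(placement.count("gpu"), placement.count("cpu"), makespan(procs))  # 6 2 60.0
```

With these assumed costs, sending all eight tasks to the GPU would take 80 ms, whereas the mixed assignment finishes in 60 ms. The real gain depends on measured task costs and on the monitoring overhead.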

From the device to the cloud

Load balancing within the device is a necessary first step to maximize the device’s capabilities and to cope with demanding and variable workloads. But to overcome the limited processing capacity of a single device, a second step is needed: load balancing at the system level. Only this makes it possible to exploit the potential of a highly connected environment in which the resources of other devices can be shared.

In addition, today’s applications tend to become more complex and more demanding in both processing and bandwidth. On top of this, users keep asking for lighter, portable, multifunctional devices with longer battery life. Naturally, this makes it hard for these devices to deliver the processing power that many applications require.

The solution is to balance the load between multiple devices by offloading tasks from overloaded or processing-constrained devices to more capable ones that can process these tasks remotely. This is linked to the “thin client” and “cloud computing” concepts, in which the limited processing resources of a device are virtually expanded by shifting processing tasks to other devices in the same network.
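
One simple way to frame the offloading decision is to compare estimated completion times: offloading pays off only if remote processing plus the transfer of the task data is faster than running the task locally. The following is a minimal sketch of that rule. The function, its parameters and the example numbers are hypothetical, and a real system would also weigh battery use and the load on the remote device.

```python
def should_offload(local_ms, remote_ms, payload_bits, link_bps, rtt_ms=0.0):
    """Return True when remote execution, including data transfer and
    round-trip latency, is estimated to finish before local execution."""
    transfer_ms = payload_bits / link_bps * 1000.0
    return remote_ms + transfer_ms + rtt_ms < local_ms


# A task that takes 100 ms locally and 20 ms remotely, with 1 Mbit of data to move.
print(should_offload(100, 20, 1_000_000, 100_000_000))  # True: 10 ms transfer
print(should_offload(100, 20, 1_000_000, 10_000_000))   # False: 100 ms transfer
```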

As an example, think of home networks or local area networks that connect multiple heterogeneous devices. Some of these are light portable devices, such as iPhones and PDAs, with limited processing capabilities and battery life. Others offer more processing power, such as media centers or desktops at home, or other processing nodes and servers in the network infrastructure.

One consequence of migrating tasks from lighter devices to more capable ones is that the traffic between devices increases. In video applications in particular, where video is decoded remotely and then transmitted, the bandwidth demand can be very high. Fortunately, upcoming wireless technologies provide increasingly high bandwidth and connectivity, which makes load balancing possible. Examples are LTE femtocells, which offer up to 100 Mbps downlink, and wireless HD communication systems in the 60 GHz range, for which as much as 25 Gbps is promised.
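
To get a feel for these numbers, the raw bitrate of decoded video can be estimated from resolution, frame rate and pixel format. The sketch below assumes uncompressed 8-bit YUV 4:2:0 frames (12 bits per pixel) at 1920x1080 and 30 frames per second. These parameters and the function names are illustrative assumptions, not figures from the experiment, and protocol overhead is ignored.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel=12):
    """Bitrate of uncompressed video in bit/s.
    12 bits per pixel corresponds to 8-bit YUV 4:2:0."""
    return width * height * bits_per_pixel * fps


def streams_that_fit(link_bps, stream_bps):
    """Number of whole streams a link can carry, ignoring protocol overhead."""
    return int(link_bps // stream_bps)


full_hd = raw_bitrate_bps(1920, 1080, 30)
print(full_hd)                                     # 746496000 bit/s, about 746 Mbps
print(streams_that_fit(100_000_000, full_hd))      # 0 on a 100 Mbps link
print(streams_that_fit(25_000_000_000, full_hd))   # 33 on a 25 Gbps link
```

Under these assumptions, a single uncompressed full-HD stream already exceeds a 100 Mbps link, so the decoded video would have to be compressed again before transmission or sent over a much faster link.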

However, meeting the bandwidth needs is only one of many challenges. Achieving efficient and flexible load balancing in the network also requires a high degree of cooperation and intelligence from the devices in the network. This implies not only processing capabilities on the network side, but also monitoring, decision-making and signaling capabilities.

Experimental work at imec has shown that, as challenging as it may sound, the future in which all devices in the network efficiently communicate and share resources is much closer than we think.
