I have put Embedded Insights into archive mode because it continues to receive a substantial number of visits each month. Please visit Encore Info to see our semiconductor research reports. Our first report is available now and is titled “Connected/Smart Device Semiconductor Forecast 2011-2017.”

Eclipse and NetBeans replacing embedded IDEs (part 3)

Tuesday, June 12th, 2012 by Robert Cravotta

In parts 1 and 2 of this series we identified the Eclipse and NetBeans open source IDE platforms as targets for embedded development tools; how the choice of platform affects embedded developers differently than embedded tool developers; and how both platforms were initially developed without any focus on the needs of embedded developers. We also explored the reasons embedded tool developers are considering, and in many cases migrating, their development tools to an open source IDE platform.

Both the NetBeans and Eclipse projects began as Java development environments and both evolved into platforms that support a wider array of software products. Both platforms have been actively supported and evolving open source projects that have competed and coexisted for the past decade, and this has led to a level of parity between the two platforms. From the perspective of a tool developer, applications are built the same way on either platform – the difference is in the specific terminology and tools. For example, NetBeans uses modules while Eclipse uses plugins. Both platforms support extension registries with extension points to contribute extensions to the core system. Both IDE platforms require a JRE (Java Runtime Environment) to be installed and running on the host system. Because both IDEs run on a Java Virtual Machine, they are both able to target a variety of operating systems and both platforms are tested against a similar set of operating systems including Windows, Linux (e.g. Red Hat, Ubuntu), Sun Solaris, Macintosh OS, and others.
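The extension registry idea can be illustrated with a minimal, platform-neutral sketch. This is not the Eclipse or NetBeans API; the class `ExtensionRegistry`, the extension point id `debug.launchers`, and the helper `launch_all` are hypothetical names chosen only to show how a core system can discover contributions it knows nothing about in advance.

```python
class ExtensionRegistry:
    """Hypothetical registry: maps an extension point id to contributed extensions."""

    def __init__(self):
        self._extensions = {}

    def contribute(self, point_id, extension):
        # A plugin or module registers its contribution under a named extension point.
        self._extensions.setdefault(point_id, []).append(extension)

    def extensions_for(self, point_id):
        # The core system asks for everything contributed to a given point.
        return list(self._extensions.get(point_id, []))


def launch_all(registry, target):
    # The core calls each contribution without knowing which vendor supplied it.
    return [ext(target) for ext in registry.extensions_for("debug.launchers")]


registry = ExtensionRegistry()
# Two independent (hypothetical) vendor contributions to the same extension point.
registry.contribute("debug.launchers", lambda target: f"JTAG session for {target}")
registry.contribute("debug.launchers", lambda target: f"simulator session for {target}")

print(launch_all(registry, "mcu-board"))
```

The point of the pattern is that the core and the contributions are coupled only through the extension point id, which is roughly why tools from different vendors can coexist on one platform.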

From the perspective of an embedded developer, using a development toolset based on either platform does not require the developer to even know which platform the tool is based on. Each downloadable toolset we tested was only available as a single install package that included all of the necessary core components and needed plugins or modules. By packaging the IDE in a controlled install package (as opposed to providing a set of modules or plugins to add to a set of core IDE versions), the tool developer hides from the embedded developer the complexity inherent in downloading or updating the core IDE along with all of the necessary support plugins or modules. As there is only a single NetBeans-based development toolset at this time, there was no opportunity to compare it with other similar embedded development tools based on the same platform, but the look and feel for the installation of the NetBeans-based toolset was similar to the Eclipse-based tools.

During the process of installing multiple Eclipse-based development toolsets, multiple instances of the underlying Eclipse IDE runtime were installed on the workstation. Each instance appears to act independently of the others such that different settings for one tool did not change the settings in another tool that was based on the same platform. In fact, each development tool was based on a different version of the platform. There was no obvious method for updating the IDE platform underlying the embedded toolset, but this makes sense as each tool provider needs to be able to control the variability of the platform so as to be able to provide competent support across a limited range of configurations.

Another reason for bundling the core components with the vendor specific extensions is that some tool developers change standard components of the platform. For some toolsets, the tool developers chose to modify some of the standard components to improve the usability of the tools, most notably those for debugging an embedded target. While a number of tool developers indicate that they plan to make their tools available as plugins or modules, it is not clear to us that supporting the explosion in configurations of core IDE, host system, target processor, and plugin versions would make that a practical choice, as it is impossible to force all embedded developers to use the latest version of the development tools.

Considerations

There are three system-level considerations consistently identified by tool developers for choosing either Eclipse or NetBeans for embedded development tools beyond a feature-by-feature comparison of the two platforms. One system-level consideration is whether the tool provider’s third party tool partners are using the same platform. By providing the tools on the same platform, each of the tools within the development ecosystem gains the integration benefits of using a common infrastructure. The Eclipse platform has a seven year head start over NetBeans in supporting embedded development tools, and the Eclipse Foundation claims that there are over 50 embedded companies using the platform for their development tools. On the other hand, if a single company is providing a complete tool chain for its silicon products or has coordinated with its tool partners to use NetBeans, then it has the opportunity to rely on the platform that is reported to be easier to learn and to use.

NetBeans is generally acknowledged within the user community to be more focused and easier to learn; in contrast, Eclipse is considered a general platform, which enables it to better support a wider range of projects. It is a classic simple ease-of-use versus complex flexibility trade-off. Providing complete install packages helps mitigate the configuration complexity for both platforms. The differences in the file, menu, and presentation architectures are open to debate as to whether one is better than the other or they are just different. In any case, there is a learning curve for adopting either platform. This difference between focused and jack-of-all-trades projects plays to the third system-level consideration: how new features will work their way into future versions of the platform.

Eclipse has a heterogeneous embedded community that consists of many companies with differing views on how the platform should evolve forward. As a result, ideas and software come from a wider variety of embedded sources into the Eclipse platform, and there will be conflicts in similar approaches that must be resolved. In contrast, by being an early embedded adopter for NetBeans, Microchip gains the benefits of using a more focused IDE platform while providing a dominant voice, even if only temporarily as other tool developers adopt the platform, for establishing priorities for embedded features that should be integrated into the core platform. A trade-off for being an early embedded adopter though is that new innovations for the NetBeans platform will come from a much smaller group of companies than is available for the Eclipse platform.

An important outcome of embedded development tools leveraging either of the open source IDE platforms is that embedded developers are likely to benefit much sooner from successful new innovations created for application developers. For example, the adoption of IDEs for embedded development tools lagged the application development world by ten years or more. A reason for this lag is that the embedded tool market is greatly fragmented – especially when compared to the application market. Whereas the application market has a handful of operating systems and dozens of processor targets to contend with, the embedded market is supported by over 80 different processor vendors, each with a range of processor targets that optimize and trade off different performance constraints.

The small audience for each of a wide range of application specific and optimized embedded processors eliminates the practicality of investing precious engineering resources to incorporate evolving IDE functions and features in a proprietary platform. Instead, the open source platform approach allows the embedded development tools to more easily leverage and deliver new features without redirecting the engineering resources needed to improve specific tool features that address the real-time and variable environmental issues that make developing embedded systems so different from application software. Using an open source platform allows a tools group to avoid expending resources on emerging IDE features and focus more of their resources on creating differentiating engineering features that better target the needs of embedded developers and set their toolset and silicon apart from the rest.

Eclipse and NetBeans replacing embedded IDEs (part 2)

Thursday, May 17th, 2012 by Robert Cravotta

In part 1 of this series we identified the Eclipse and NetBeans open source IDE platforms as targets for embedded development tools. The choice of using an open source IDE platform affects embedded developers differently than the tool developers. Both platforms were initially developed without any focus on the needs of embedded developers, and only over the past few years have they been evolving to better support the needs of embedded developers.

Many embedded tool developers are choosing to migrate their embedded development toolset to an open source IDE platform for a number of reasons. Maintaining an up-to-date IDE with the latest ideas, innovations, and features requires continuous effort from the tool development team. In contrast to maintaining a proprietary IDE, adopting an open source IDE platform enables the tool developers to leverage the ideas and effort of the community and take advantage of advances in IDE features much sooner and without incurring the full risk of experimenting with new features in their own toolsets. Both the Eclipse and NetBeans platforms deliver regular releases that enable tool developers to more easily take advantage of the newest features in the platform architecture.

This is not to say that using the open source platform is a free ride. For example, more than a year passed between the time the public was told that MPLAB X IDE would be NetBeans-based and the release of the production version of the development suite. Additionally, embedded development tools based on open source platforms typically lag the latest release because the tool developers need to address any issues the platform update might inflict on their toolset. In other words, it is up to the tool developers to shield the embedded developers from the migration issues from one release version of the platform to another. In the experience of some tool developers, completing a basic release migration and certification can take about a month for each release. This is significantly less time than it would take to build and certify a new capability into a proprietary IDE.

Illustrating a challenge facing embedded tool developers that migrate to an open source IDE platform, Freescale announced in June 2008 that its CodeWarrior development environment would be leveraging the Eclipse platform; three years later the company still offers classic and Eclipse-based IDEs for its processors. Embedded tool providers, as illustrated in this case, may still need to incur support costs for the legacy tools for years, especially if those tools were involved in systems that require certifications.

Before migrating to an open source IDE platform, embedded development tools typically only support development on a single platform, such as a Windows host. Migrating to Eclipse or NetBeans enables tool developers to support the toolset on a variety of hosts because both platforms operate on a Java Virtual Machine, which enables them to more easily target different operating systems. For example, Microchip’s MPLAB IDE v8 runs as a 32-bit application on Windows, but MPLAB X IDE is available on Windows, Linux, or Mac OS X 10.x hosts. However, supporting multiple hosts incurs additional complexity for tool support, and just because the platform can support an operating system does not mean that a tool provider will provide that support. For example, several companies with Eclipse-based tools support only Windows and Linux hosts even though the platform itself supports Macintosh hosts; these tool developers are currently not seeing sufficient demand for Macintosh support to warrant providing it.

A key consideration for tool developers choosing an open source IDE platform is how it can facilitate integrating tools with third party partners. For example, being able to cleanly integrate with third party tools is especially valuable to silicon providers working with RTOS partners. By using a common platform, it is simpler for tool partners to integrate their tools without the prior and tight interface coupling that is required when using a proprietary IDE. In fact, the common platform approach has allowed tools that were never explicitly targeted for integration to be used together. An example of an early success of serendipitous integration occurred between version control systems and editing, debugging, and profiling tools.

Simplifying the trade-offs between choosing to migrate to one or the other open source IDE platform, an embedded developer using a development toolset based on either platform does not even need to know which platform the tool is based on. Each downloadable toolset we tested was only available as a single install package that included all of the necessary core components and needed plugins or modules. By packaging the IDE in a controlled install package (as opposed to providing a set of modules or plugins to add to a set of core IDE versions), the tool developer hides from the embedded developer the complexity inherent in downloading or updating the core IDE along with all of the necessary support plugins or modules. As there is only a single NetBeans-based development toolset at this time, there was no opportunity to compare it with other similar embedded development tools based on the same platform, but the look and feel for the installation of the NetBeans-based toolset was similar to the Eclipse-based tools.

Adopting an open source IDE platform also provides tool developers the opportunity to directly participate with the platform development community by specifying and contributing to those initiatives that are appropriate for embedded designers but that are not necessarily even on the radar for the core group (Java applications) for these platforms. The need for this type of input is apparent when one understands that the history of both platforms is based on the needs of Java applications, which can be alien to the requirements of embedded developers; both platforms are still evolving to better address embedded development requirements.

In part 3 (final) of this series we will highlight the similarities and considerations that an embedded tool developer should address when choosing which open source IDE platform to migrate their legacy embedded development tools to.

Eclipse and NetBeans replacing embedded IDEs (part 1)

Wednesday, April 25th, 2012 by Robert Cravotta

The recent release of the production version of Microchip’s MPLAB X integrated development tool suite continues the trend of embedded development tools adopting an open source platform to develop and manage an IDE (integrated development environment) – with a notable exception – Microchip’s MPLAB X is based on NetBeans rather than Eclipse. NetBeans and Eclipse are both open source projects that include tools, frameworks, and components for platforms and tools supporting software development. For the past seven years only the Eclipse IDE platform has been publicly adopted by embedded tool providers; with the release of MPLAB X, Microchip is arguably the first embedded microcontroller tool provider to publicly adopt NetBeans to date (PMC Sierra uses a NetBeans-based tool that was developed internally for validation tests).

Prior to the existence of either the Eclipse or NetBeans open source IDE platforms, embedded development tool providers offered command line tools and their own home-built IDEs for use by OEM embedded developers. During the late 1990’s and early 2000’s, even though proprietary IDEs had already been available for workstation and desktop software development for a decade, these home-built IDEs provided a much needed productivity boost and simplified the complexity of learning a set of command line tools for embedded developers. The definition of a full featured IDE evolved from being just a shell environment that integrated and automated the tool flow of the software development tool chain into an environment that also provided on-demand capabilities such as programming language-specific syntax highlighting, built-in programming references accessible from within the editing environment, application-based frameworks or templates, as well as the ability to quickly switch between editing and debugging code in a cross target environment.

It soon became essential for any broadly used embedded development toolset to include a full featured IDE, and this represented a significant challenge to the tool developers because the engineering costs to build and maintain the evolving complexities of contemporary IDE functions continued to grow without an offsetting increase in differentiation from other tool offerings. The availability of open source IDE platforms provides an opportunity to leverage the latest concepts in IDEs by leveraging the efforts of many tools developers and avoiding committing a growing percentage of the tool development team’s effort to IDE innovations. This is especially important for embedded development tool chains because they are usually applicable to a small target audience and do not enjoy the amortization opportunities that broadly adopted development tools that target workstations or desktops enjoy.

The choice to use an open source IDE platform for embedded development tools impacts two groups of developers in different ways. One group consists of tool developers that make the development tools, and the other group consists of embedded developers that use the development tools.

The way embedded or end-system developers use development tools based on either the NetBeans or Eclipse open source IDE platforms differs significantly from the way tool developers do because rather than targeting the IDE platform, embedded developers are using the tools built on the platform to build cross platform systems that target embedded processors (such as microcontrollers and DSPs). Also, it is unlikely embedded developers will ever host the open source platform on a target embedded system (in contrast to a mobile application), while the tool developers directly target their chosen open source platform on a development workstation. This difference in usage may explain why embedded developers, based on an informal survey about open source development tools, tell us they are more concerned with the speed, functionality, and ease-of-use of the toolset than with the name of the underlying platform.

In contrast, the tool developers are affected most directly by the choice to use an open source IDE platform because the tool environment they are creating actually runs on the IDE platform – which is itself running on a Java Runtime Environment. They must intimately understand and work with the architecture and components of the open source platform they are working with. In this case, both the NetBeans and Eclipse platforms share a lot of similarities in that they: provide a framework for desktop application developers, offer a large number of similar features out-of-the-box, and provide a rich set of APIs accompanied by tutorials, FAQs, and books. The tool developers are also responsible for deciding how to present and deliver embedded development concepts, including those that are not ideally addressed in the chosen open source platform architecture; these kinds of mismatches can occur because the architecture of both of these platforms was originally developed with the needs of Java application developers in mind rather than embedded developers.

Understanding the background of both the NetBeans and Eclipse platforms exposes how each platform has reached its current state and how they are evolving to be able to more appropriately support embedded development. What has become NetBeans began in the Czech Republic in 1996 as a student project to build a Delphi-like Java IDE in Java. The project attracted enough interest that the group of students formed a company around it after they graduated. The original business plan was to develop network-enabled JavaBean components, which the company named NetBeans. When the specification for Enterprise Java Beans came out, the group decided it made more sense to work with the standard rather than compete with it, but they kept the NetBeans name. By the end of 1999, Sun Microsystems (later acquired by Oracle) acquired the company and planned to make NetBeans their flagship Java toolset. During the acquisition by Sun Microsystems, the idea of open-sourcing NetBeans was discussed and in June 2000, netbeans.org was launched.

The NetBeans team introduced APIs (application programming interfaces) to make the IDE extensible. A NetBeans module is a group of Java classes that provides an application with a specific feature, including features for the IDE. In response to developers building applications using the NetBeans core runtime along with their own modules or plugins to make applications that were not development tools, the NetBeans group stripped out those pieces from the architecture that made the assumption that an application built on NetBeans was an IDE; this more easily enabled developers using the NetBeans platform to target a generic desktop application for any purpose. NetBeans evolved into a platform that supports web, enterprise, desktop, and mobile applications using the Java platform, as well as PHP, JavaScript and Ajax, Groovy and Grails, and C/C++.

In contrast, the Eclipse platform began as a project within IBM to provide a common platform to avoid duplicating the most common elements of software infrastructure for all IBM development products that were centered on Java development tooling. The vision was to enable a customer development environment that was composed of heterogeneous tools from IBM, the customer’s own toolbox, and third-party tools. Work began in 1998 on what eventually became the Eclipse platform. The team understood that the success of the platform needed broad adoption by third party vendors, and in response to the reluctance of business partners to invest in the as yet unproven platform the project adopted an open source licensing and operating model in 2001.

Despite adopting an open source licensing model, according to industry analysts at the time, the marketplace perceived Eclipse as an IBM-controlled effort, which made vendors reluctant to commit to the platform. In 2004, the Eclipse project was moved under the control of the newly created Eclipse Foundation, an independent non-profit organization with its own independent, paid staff that was supported by member company dues. It is around this time that companies serving the embedded market started evaluating the Eclipse platform for their own toolsets. Wind River (later acquired by Intel) was among the first companies to publicly commit to using the Eclipse platform. Since then, a growing list of companies (50+ according to the recent Eclipse 10 years of innovation announcement) serving the embedded community have adopted and used the Eclipse platform for their development tools.

In part 2, we will explore the reasons embedded tool developers are considering and, in many cases, migrating their development tools to an open source IDE platform.

One Picture Is Worth a Thousand Words

Wednesday, April 18th, 2012 by Max Baron

The San Jose Mercury News described Facebook’s recent acquisition of Instagram for $1 Billion as a deal that “surprised and stunned the tech world.” The surprise will, however, turn to shock and admiration once the free Instagram app is put through its paces. Shock at the program’s engineering simplicity and admiration for those that gained millions of followers and ultimately sold the app to Facebook for the ten-figure sum.

Designed for a cellular phone, the application works also on a tablet, such as iPad, but keeps its cell phone format. The program helps Instagram logged-in members to process and post their own photos as well as download images posted by other members.

Having loaded an existing image or a new one taken by using the incorporated camera controls, the Instagram app user can employ a number of simple image convolution filters to modify the image tint and luminosity. A preset contrast filter can also provide a feeling of sharpness enhancement, while area-specific convolution can introduce artificial focus. The application does not provide support for knowledgeable users to define their own convolution filters. The app supports basic image zooming, cropping and orientation. Users can also frame the image to be posted. Browsing through other members’ photos is supported, among other features, by a display of 20 thumbnails and a “like” vote button.

Facebook’s recent acquisition of Instagram for $1 Billion reminds one of the time programs on the PC began to offer simple custom photo processing and, later, support for sharing. Word processing and spreadsheets were not immediately dethroned but had to cede a seat in popularity to multimedia: still and video imaging plus sound and the processing required to do them justice. The popularity of Facebook and other community sites will accelerate the penetration of shared multimedia among mobile systems but in ways that are not exactly similar to the PC’s adaptation to multimedia workloads. This time around mobile multimedia will have to support existing quality requirements that were not immediately present for the cell phone or earlier for PC software. The present performance demand posed by high resolution images on tablets and cellphones is further magnified by the presence of high performance high pixel density digital cameras incorporated in tablets and in prosumer (professional-consumer) quality cameras.

Even higher performance is required by the mobile processing of video and sound that have barely been touched. Stand-alone camera manufacturers may want to introduce their own direct WiFi and cellular phone connection to help users share quality photos and video with mobiles whose displays will do them justice. Olympus is already offering a cell phone targeted Bluetooth module that can be attached to the auxiliary port present in some of the company’s new mirror-less cameras.

Accelerated by Facebook and others soon to follow, the trend to enhance and share photos and video on mobile systems will require the high image processing capabilities that can be delivered by SoCs employing multiple processing cores. Without them, the tablets and cell phones will feel much warmer than some are already feeling today—and the loss of energy will result in shorter battery life between charges. Many high performance cameras, having already learned the lesson, are employing multiple core SoCs of their own design or purchased from expert sources such as Ambarella. The resulting high quality images will have to be stored on higher capacity servers.

With the acquisition of Instagram, Facebook has started a trend that brings to mind the saying “one picture is worth a thousand words.” For Instagram, the acquisition means $1 Billion. For the semiconductor industry the trend can mean multiple billions of dollars.

How could easing restrictions for in-flight electronics affect designs?

Wednesday, March 28th, 2012 by Robert Cravotta

The FAA (Federal Aviation Administration) has given permission to pilots on some airlines to use iPads in the cockpit in place of paper charts and manuals. In order to gain this permission, those airlines had to demonstrate that the tablet did not emit radio waves that could interfere with aircraft electronics. In this case, the airlines only had to certify the types of devices the pilots wanted to use rather than any of the devices that passengers would want to use. This type of requirements-easing is a first step toward possibly allowing electronics use during landings and takeoffs for passengers.

Devices, such as the Amazon Kindle, that use an E-ink display, can emit radio emissions that are less than 0.00003 volts per meter (according to tests conducted at EMT Labs) when in use – well under the 100 volts per meter of electrical interference that the FAA requires of airplanes. Even if every passenger were using a device with emissions this low, it would not exceed the minimum shielding requirements for aircraft.

A challenge though is whether allowing some devices versus others to operate throughout a flight would create situations where enough passengers might accidentally leave on or operate their unapproved devices so that taken together – all of the devices might exceed the safety constraints for radio emissions.
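As a rough check on the scale involved, and under the deliberately pessimistic assumption (not stated in the cited tests) that the field strengths of individual devices simply add, the number of such devices needed to reach the 100 volts per meter figure would be:

```latex
N_{\max} = \frac{E_{\text{limit}}}{E_{\text{device}}}
         = \frac{100\ \text{V/m}}{0.00003\ \text{V/m}}
         \approx 3.3 \times 10^{6}
```

That is more than three million devices, several orders of magnitude beyond the passenger count of any airliner. Real emissions do not combine this simply, so this is only an order-of-magnitude illustration.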

On the other hand, being able to operate an electronic device throughout a flight would be a huge selling point for many people – and this could lead to further economic incentives for product designers to push their designs to those limits that could gain permission from the FAA.

Is the talk about easing the restrictions for using electronic gadgets during all phases of a flight wishful thinking or is the technology advancing far enough to be able to offer devices that can operate well below the safety limits for unrestricted use on aircraft? I suspect this on-going dialogue between the FAA, airlines, and electronics manufacturers could yield some worthwhile ideas on how to ensure proper certification, operation, and failsafe functions within an aircraft environment that could make unrestricted use of electronic gadgets a real possibility in the near future. What do you think will help this idea along, and what challenges need to be resolved before it can become a reality?

When is running warm – too hot?

Wednesday, March 21st, 2012 by Robert Cravotta

Managing the heat emanating from electronic devices has always been a challenge and design constraint. Mobile devices present an interesting set of design challenges because unlike a server operating in a strictly climate controlled room, users want to operate their mobile devices across a wider range of environments. Mobile devices place additional design burdens on developers because the size and form factor of the devices restrict the options for managing the heat generated while the device is operating.

The new iPad offers the latest device where technical specifications may or may not be compatible with what users expect from their devices. According to Consumer Reports, the new iPad can reach operating temperatures that are up to 13 degrees higher (when plugged in) than an iPad 2 performing the same tasks under the same operating conditions. Using a thermal imaging camera, the peak temperature reported by Consumer Reports is 116 degrees Fahrenheit on the front and rear of the new iPad while playing Infinity Blade II. The peak heat spot was near one corner of the device (Image at the referenced article).

This type of peak temperature is perceived as warm to very warm to the touch for short periods of time. However, some people may consider a peak temperature of 116 degrees Fahrenheit to be too warm for a device that they plan to hold in their hands or on their lap for extended periods of time.

There are probably many engineering trade-offs that were considered in the final design of the new iPad. The feasible options for heat sinks or distributing heat away from the device were probably constrained by the iPad’s thin form factor, dense internal components, and larger battery requirements. Integrating a higher pixel density display definitely provided a design constraint on how the system and graphic processing was architected to deliver an improvement in display quality and maintain an acceptable battery life.

Are consumer electronics bumping up against the edge of what designers can deliver when balancing small form factors, high performance processing and graphics, acceptable device weight, and long enough battery life? Are there design trade-offs that are still available to designers to further push where mobile devices can go while staying within the constraints of acceptable heat, weight, size, cost, and performance? Have you ever dealt with a warm system that turned into a system that was running too hot? If so, how did you deal with it?

Unit test tools and automatic test generation

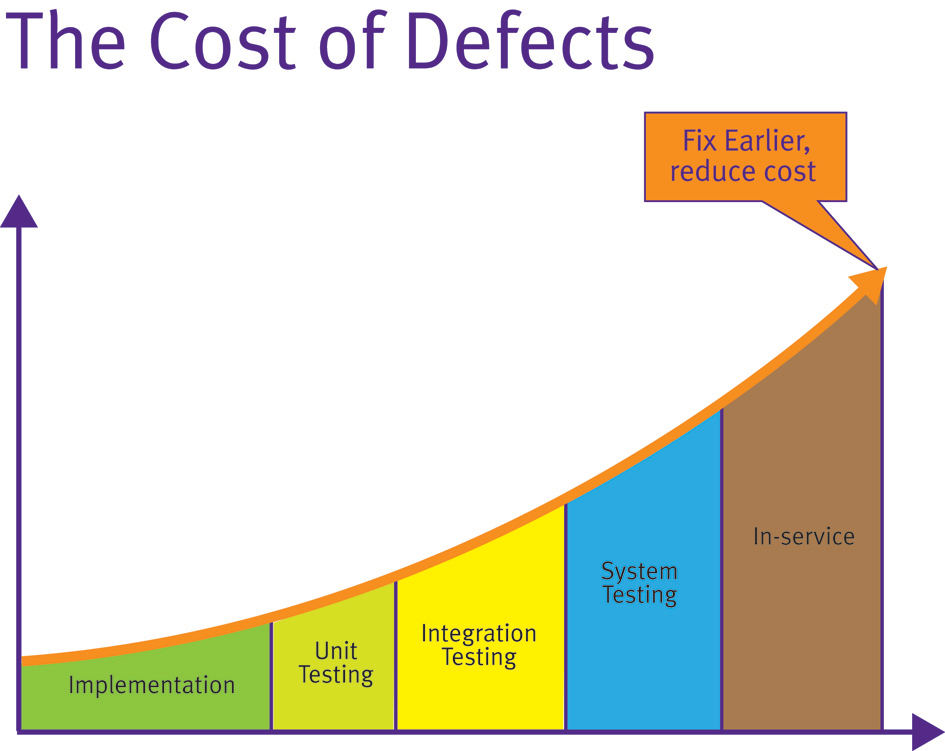

Monday, March 19th, 2012 by Mark Pitchford

When are unit test tools justifiable? Ultimately, the justification of unit test tools comes down to a commercial decision. The later a defect is found in product development, the more costly it is to fix (Figure 1). This is a concept first established in 1975 with the publication of Brooks’ “The Mythical Man-Month” and proven many times since through various studies.

The automation of any process changes the dynamic of commercial justification. This is especially true of test tools given that they make earlier unit test more feasible. Consequently, modern unit test almost implies the use of such a tool unless only a handful of procedures are involved. Such unit test tools primarily serve to automatically generate the harness code which provides the main and associated calling functions or procedures (generically “procedures”). These facilitate compilation and allow unit testing to take place.

The tools not only provide the harness itself, but also statically analyze the source code to provide the details of each input and output parameter or global variable in an easily understood form. Where unit testing is performed on an isolated snippet of code, stubbing of called procedures can be an important aspect of unit testing. This can also be automated to further enhance the efficiency of the approach.
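To make the harness and stub idea concrete, here is a small hand-written sketch in Python of the kind of scaffolding such a tool would generate automatically. Every name in it (`read_sensor`, `overheated`, `make_stub`, `run_harness`, `CASES`) is hypothetical and chosen only for illustration; it is not taken from any particular test tool.

```python
def read_sensor():
    """Called procedure. On a real target this would touch hardware,
    so it cannot run on the development host (hypothetical example)."""
    raise RuntimeError("no sensor hardware on the development host")


def overheated(limit_c, read=read_sensor):
    """Procedure under test: True when the reading exceeds the limit."""
    return read() > limit_c


def make_stub(value):
    """Build a stub that stands in for read_sensor and returns a fixed value."""
    return lambda: value


def run_harness(cases):
    """Minimal harness: drive each input through the procedure under test,
    with the called procedure stubbed, and record actual versus expected."""
    report = []
    for stub_value, limit_c, expected in cases:
        actual = overheated(limit_c, read=make_stub(stub_value))
        report.append((stub_value, limit_c, actual, actual == expected))
    return report


# Each case: (value returned by the stub, limit passed in, expected result)
CASES = [(50.0, 45.0, True), (40.0, 45.0, False), (45.0, 45.0, False)]

if __name__ == "__main__":
    for row in run_harness(CASES):
        print(row)
```

The point of the stub is isolation: the procedure under test runs on the host without the hardware-dependent call, and the operator only has to supply input values and expected results.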

This automation makes the assignment of values to the procedure under test a simpler process, and one which demands little intimate knowledge of the code on the part of the test tool operator. This distance enables the necessary unit test objectivity because it divorces the test process from that of code development where circumstances require it, and from a pragmatic perspective, substantially lowers the level of skill required to develop unit tests.

This ease of use means that unit test can now be considered a viable development option with each procedure targeted as it is written. When these early unit tests identify weak code, the code can be corrected immediately while the original intent remains fresh in the mind of the developer.

Automatically generating test cases

Generally, the output data generated through unit tests is an important end in itself, but this is not necessarily always the case. There may be occasions when the fact that the unit tests have successfully completed is more important than the test data itself. This happens when source code is tested for robustness. To provide for such eventualities, it is possible to use test tools to automatically generate test data as well as the test cases. High levels of code execution coverage can be achieved by this means alone, and the resultant test cases can be complemented by means of manually generated test cases in the usual way.
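A robustness run of this kind could look roughly like the following Python sketch. It assumes a hypothetical procedure, `scale_reading`, and a deliberately simple generation strategy (a few hand-picked boundary values plus seeded random values); commercial tools derive their test data from static analysis of the code rather than from random sampling, so treat this only as an illustration of the principle that completion matters more than the output values.

```python
import random


def scale_reading(raw, gain):
    """Hypothetical procedure under test: scale a raw reading and clamp
    the result to the range 0..1023."""
    value = int(raw * gain)
    return max(0, min(1023, value))


def generate_inputs(count, seed=0):
    """Generate test data: boundary values first, then seeded random values
    so that the same data set can be regenerated later."""
    rng = random.Random(seed)
    boundary = [(-32768, 0.0), (32767, 1.0), (0, -1.0), (32767, 1e6)]
    randoms = [(rng.randint(-32768, 32767), rng.uniform(-10.0, 10.0))
               for _ in range(count)]
    return boundary + randoms


def robustness_run(procedure, inputs):
    """Call the procedure with every input and collect any that raise.
    The returned values are ignored; only successful completion matters."""
    failures = []
    for args in inputs:
        try:
            procedure(*args)
        except Exception as exc:
            failures.append((args, repr(exc)))
    return failures


if __name__ == "__main__":
    print(robustness_run(scale_reading, generate_inputs(200)))
```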

An interesting application for this technology involves legacy code. Such code is often a valuable asset, proven in the field over many years, but likely developed on an experimental, ad hoc basis by a series of expert “gurus” – expert at getting things done and in the application itself, but not necessarily at complying with modern development practices.

Frequently this SOUP (software of unknown pedigree) forms the basis of new developments which are obliged to meet modern standards either due to client demands or because of a policy of continuous improvement within the developer organization. This situation may be further exacerbated by the fact that coding standards themselves are the subject of ongoing evolution, as the advent of MISRA C:2004 clearly demonstrates.

If there is a need to redevelop code to meet such standards, then there is a need not only to identify the aspects of the code which do not meet them, but also to ensure that in correcting them the functionality of the software is not altered in unintended ways. The existing code may well be the soundest or only documentation available, and so a means needs to be provided to ensure that it is dealt with as such.

Automatically generated test cases can be used to address just such an eventuality. By generating test cases using the legacy code and applying them to the rewritten version, it can be proven that the only changes in functionality are those deemed desirable at the outset.
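As a minimal sketch of that approach, assume a hypothetical legacy routine `legacy_checksum` and a tidied-up replacement `rewritten_checksum`. The legacy code is run first to record its outputs as a baseline, and the rewritten code is then checked against that baseline. The input set here is written by hand for brevity, whereas a test tool would generate it.

```python
def legacy_checksum(data):
    """Hypothetical legacy routine, written in an older style."""
    total = 0
    i = 0
    while i < len(data):
        total = (total + data[i]) % 256
        i = i + 1
    return total


def rewritten_checksum(data):
    """Hypothetical rewrite intended to behave identically."""
    return sum(data) % 256


def record_baseline(procedure, inputs):
    """Treat the legacy code as the specification: record what it returns."""
    return [(args, procedure(args)) for args in inputs]


def compare(procedure, baseline):
    """Return every case where the new code disagrees with the baseline,
    as (input, expected from legacy, actual from rewrite)."""
    mismatches = []
    for args, expected in baseline:
        actual = procedure(args)
        if actual != expected:
            mismatches.append((args, expected, actual))
    return mismatches


INPUTS = [(), (0,), (255, 1), (1, 2, 3), tuple(range(256))]

if __name__ == "__main__":
    baseline = record_baseline(legacy_checksum, INPUTS)
    print(compare(rewritten_checksum, baseline))
```

An empty list of mismatches only shows agreement on the inputs that were exercised, so the strength of the evidence depends on how thoroughly the generated cases cover the legacy code.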

The Apollo missions may have seemed irrelevant at the time, and yet hundreds of everyday products were developed or modified using aerospace research—from baby formula to swimsuits. Formula One racing is considered a rich man’s playground, and yet British soldiers benefit from the protective qualities of the light, strong materials first developed for racing cars. Hospital patients and premature babies stand a better chance of survival than they would have done a few years ago, thanks to the transfer of F1 know-how to the medical world.

Likewise, unit testing has long been perceived to be a worthy ideal, an exercise for those few involved with the development of high-integrity applications with budgets to match. But the advent of unit test tools offers mechanisms that optimize the development process for all. The availability of such tools has made this technology and unit testing itself an attractive proposition for applications where sound, reliable code is a commercial requirement, rather than only those applications with a life-and-death imperative.

Which processor is beginner friendly?

Wednesday, March 14th, 2012 by Robert Cravotta

My first experience with programming involved mailing punch cards to a computer for batch processing. The results of the run would show up about a week later; the least desirable result was finding out there was a syntax error in one of the cards. I moved up in the world when we gained access to a teletype that allowed us to enter the programs directly to the computer; however, neither of these experiences hinted at the true complexity that embedded programming would entail.

The Z80 was the first processor that I worked with that truly exposed the innards of the processor to me. A key reason for this was the substantial hobbyist community that had grown up around the Z80. I had (and still have in storage) a cornucopia of technical documents that exposed in detail every part of the system and ways to use them effectively. When I look back on those memories I marvel at the amount of information that was available despite the lack of any online connectivity – or in other words, no internet.

I found significant value in being able to examine other people’s code in real use applications. Today’s development support often includes application notes and sample code that address a wide range of use cases for a target processor. Online developer communities provide a valuable opportunity for developers to find example material and, even better, to query the community for examples of how to address a specific function with that target processor.

I would like to confirm that the specific capabilities of the processor are less important (because they all provide a minimum good set of functions) and that good development tools, tutorials and sample code, as well as responsive developer community support are more critical to a beginner.

Which processor (or processors) do you find to be beginner friendly or provide the right set of development support that make getting started with the processor faster and easier? Does using an RTOS, operating system, and/or middleware make this easier or harder? Which processors are the best examples of the type of developer community support you find most valuable?

Are random numbers a solved function?

Wednesday, March 7th, 2012 by Robert Cravotta

Random number generators (RNGs) have been used across a wide range of applications for many decades. They can be implemented in a variety of forms. Pure software algorithms make it possible to reproduce a specific sequence of “random” numbers at a later time, such as when performing simulation functions or debugging a system, by reusing the same seed value for the algorithm. Some processors include a hardware random number generator to provide a sequence of numbers that is as close to a true random sequence as possible.
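The difference between the two forms can be sketched in a few lines of Python. The function names `simulate` and `unrepeatable` are hypothetical. `random.Random` is a seeded software generator, while `random.SystemRandom` draws from the operating system's entropy source and is used here only as a host-side stand-in for a hardware generator, which is an assumption made for illustration.

```python
import random


def simulate(seed, steps=5):
    """Software generator: the same seed always reproduces the same
    sequence, which is what makes a simulation or debug run repeatable."""
    rng = random.Random(seed)
    return [rng.randint(0, 255) for _ in range(steps)]


def unrepeatable(steps=5):
    """Stand-in for a hardware generator: values come from the operating
    system's entropy source and cannot be replayed from a seed."""
    rng = random.SystemRandom()
    return [rng.randint(0, 255) for _ in range(steps)]


if __name__ == "__main__":
    print(simulate(1234) == simulate(1234))  # same seed, same sequence
    print(unrepeatable())
```

Logging the seed alongside a failing run is usually enough to replay the exact sequence that triggered the failure, provided the same generator algorithm is used for the replay.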

However, the suitability of a sequence of random numbers can vary based on the context of the application that is using them. For example, devices that select random tracks of music to play have undergone an evolution from a true random sequence to one that strips out repetitive appearances of the same number that occur too close to one another in the sequence.
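One way a player might strip out close repeats is to exclude the most recently played tracks from each draw. The sketch below is only an assumed approach, not a description of how any actual device works, and the names `spaced_picks` and `min_gap` are hypothetical.

```python
import random
from collections import deque


def spaced_picks(track_count, picks, min_gap, seed=None):
    """Pick track numbers at random, never repeating a track that was
    among the previous min_gap picks. min_gap=0 gives a plain random draw."""
    if min_gap >= track_count:
        raise ValueError("min_gap must be smaller than track_count")
    rng = random.Random(seed)
    recent = deque(maxlen=min_gap)  # sliding window of the last min_gap picks
    sequence = []
    for _ in range(picks):
        candidates = [t for t in range(track_count) if t not in recent]
        choice = rng.choice(candidates)
        sequence.append(choice)
        recent.append(choice)
    return sequence


if __name__ == "__main__":
    print(spaced_picks(track_count=10, picks=20, min_gap=3, seed=1))
```

The result is less random in the statistical sense, yet it matches what listeners expect, which illustrates why suitability depends on the application rather than on randomness alone.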

Are random number generators a solved function for system developers? Because not all RNGs are equal in their randomness, does that affect a porting effort when moving not just from one processor to another, but from one software development toolset to another? Have you been bitten by assumptions about an RNG that turned out to be horribly unsuitable to your application, or are RNGs mature enough that such horror stories are a thing of the past?