The market for tablet computers is exploding, with an estimated 100 million units expected to be in use by 2013. The tablet form factor is very compelling because of its small size, light weight, and long battery life. Tablet operating systems such as Android, MeeGo, and iOS have also been designed around touch input, making their applications fun and easy to use.

One such tablet, the Apple iPad, has been a phenomenal success as a media-consumption device for watching movies, reading e-mail, surfing the Web, playing games, and other activities. But one thing is missing to make it the only device you need when going on a business trip: pen input. Pen input facilitates handwriting and drawing, which is important because our brain connectivity and the articulation in our arms, elbows, wrists, and fingers give us much greater motor skills when we use a tool. Everybody needs a pen, including children learning how to draw and write, engineers sketching a design, and business people annotating a contract.

Accordingly, the ultimate goal for tablet designers should be to replicate the pen-and-paper experience. Pen and paper work so well together: resolution is high, there is no lag between the pen movement and the depositing of ink, and you can comfortably rest your palm on the paper, with its pleasing texture.

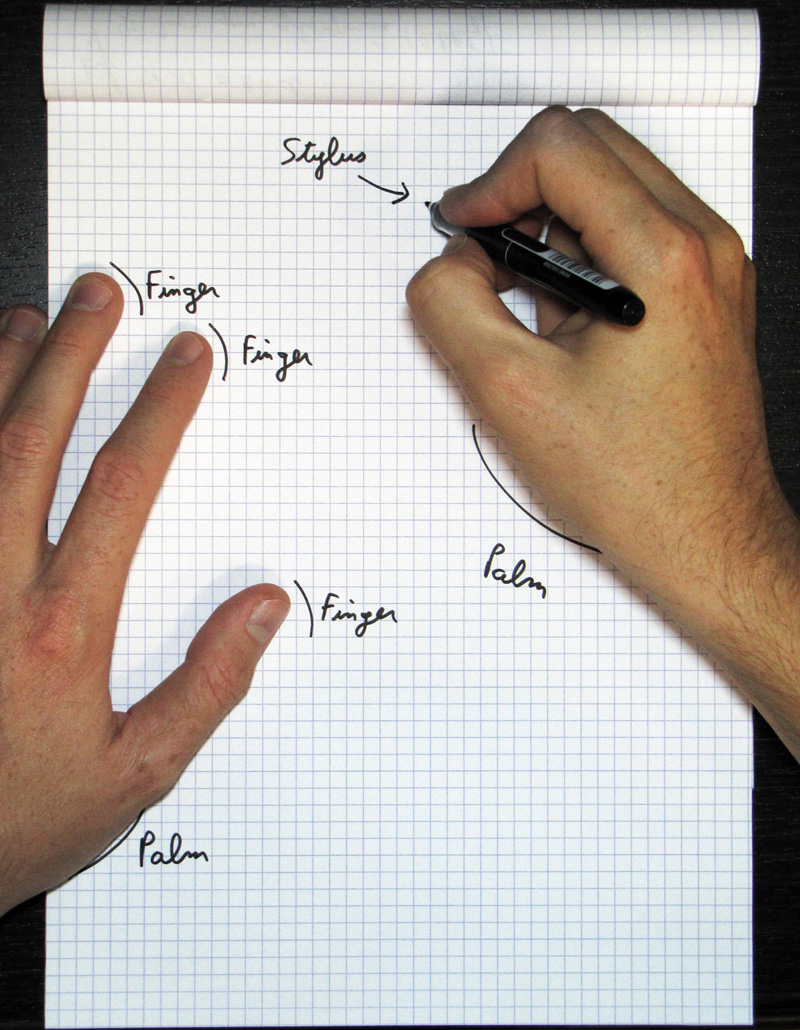

These qualities, however, are not easy to replicate in a tablet computer. You need high responsiveness, high resolution, good rendering, and palm rejection. Writing on a piece of glass with a plastic stylus is not an especially pleasing experience, so you also need a coating on the glass and a stylus whose shape and feel approximate the pen-and-paper experience. Most importantly, you need an operating system and applications that have been designed from the ground up to integrate this input approach.

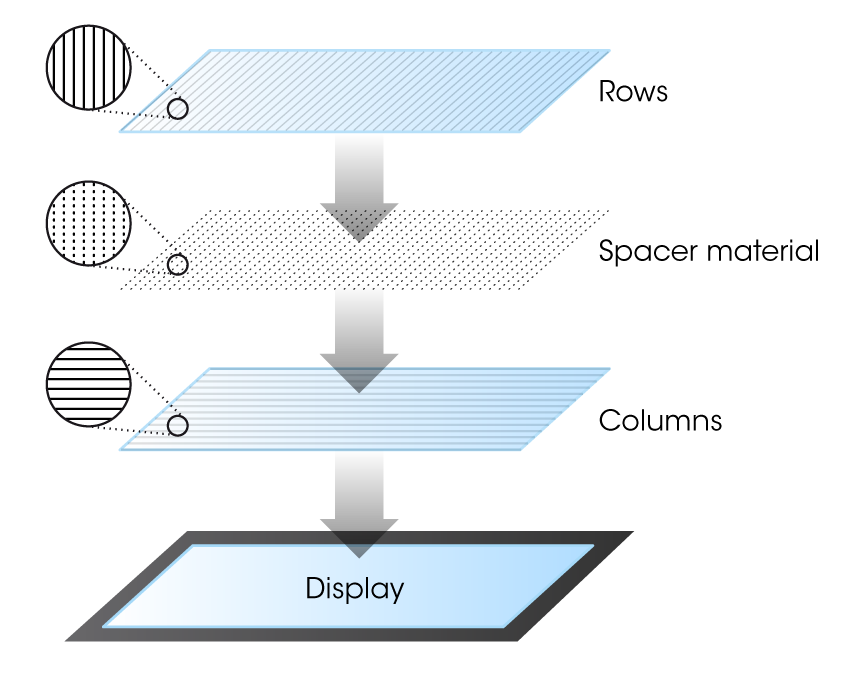

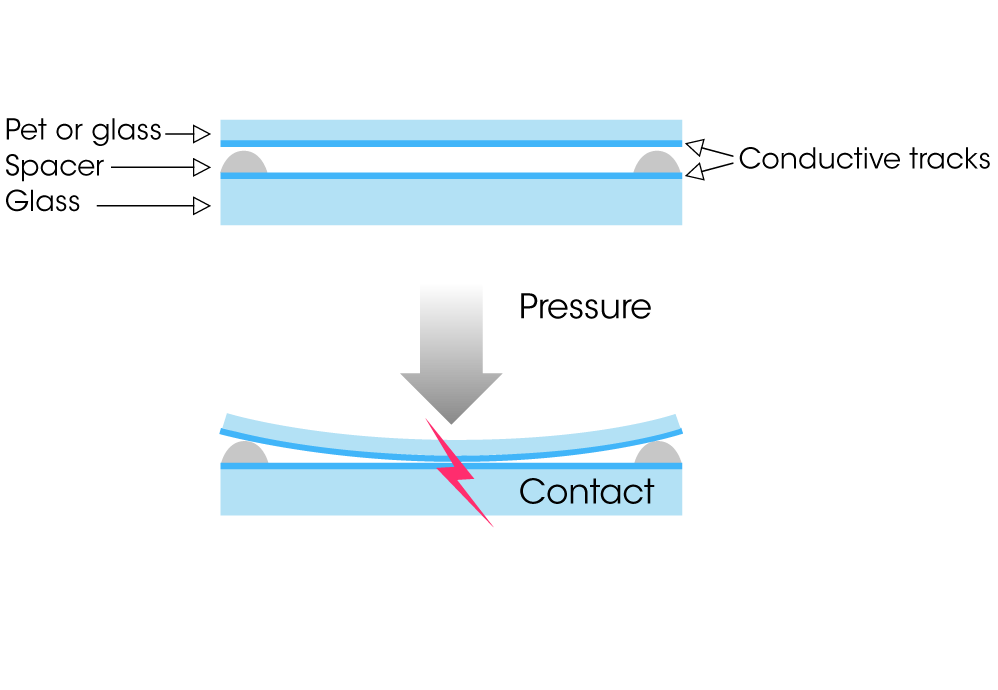

Interpolated voltage sensing matrix (iVSM) is a multi-touch technology that provides smart detection, including handwriting rendering and palm rejection. It lets users simultaneously move a pen or a stylus (and an unlimited number of fingers) on a screen. iVSM is similar to projected capacitive technology in that it employs conductive tracks patterned on two superimposed substrates (made of glass or hard plastic). When the user touches the sensor, the top layer bends slightly, enabling electrical contact between the two patterned substrates at the precise contact location (Figure 3). A controller chip scans the whole matrix to detect such contacts and tracks them to deliver cursors to the host. However, whereas capacitive technology relies on proximity sensing, iVSM is force activated, enabling it to work with a pen, a stylus, or any number of other implements.
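To make the scan-and-detect idea concrete, here is a minimal sketch of how one frame from a force-activated matrix might be processed. It is not the actual iVSM controller algorithm, which the article does not describe. The function name `detect_contacts`, the `threshold` and `palm_cells` parameters, and the representation of a frame as a grid of normalized readings are all assumptions made for illustration. The sketch groups neighboring active cells into contacts, interpolates a sub-cell position from the readings, and labels large contacts as a palm so the host can ignore them.

```python
from collections import deque


def detect_contacts(frame, threshold=0.2, palm_cells=6):
    """Group active cells of one scanned frame into contacts.

    frame      -- list of rows of normalized readings (hypothetical format)
    threshold  -- minimum reading treated as a contact (assumed value)
    palm_cells -- contacts covering at least this many cells are labeled
                  "palm" (assumed heuristic, not the real iVSM rule)
    """
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    contacts = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Flood fill over 4-connected neighbors to collect one contact.
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Weighted centroid: interpolates a position between track
            # intersections instead of snapping to the nearest cell.
            total = sum(frame[y][x] for y, x in cells)
            cy = sum(y * frame[y][x] for y, x in cells) / total
            cx = sum(x * frame[y][x] for y, x in cells) / total
            kind = "palm" if len(cells) >= palm_cells else "point"
            contacts.append({"x": cx, "y": cy, "cells": len(cells), "kind": kind})
    return contacts
```

In a real device the controller would also match contacts from one frame to the next in order to track them as cursors; that step is omitted here, and a production palm-rejection rule would likely consider shape and pressure as well as area.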

The tablet computer revolution is well underway around the world, with handwriting becoming an increasingly necessary function. Accordingly, device designers and vendors should take proper heed, or they might soon be seeing the handwriting on the wall.

Tags: Handwriting Input, Tablets

“Accordingly, the ultimate goal for tablet designers should be to replicate the pen-and-paper experience.”

I disagree. (I realize that you were primarily referring to the immediate interface, but consideration of the higher levels may well be important in the implementation of the lower levels, besides the implications for the amount and type of processing power desired.) Having a dynamic display provides opportunities not available with pen and paper. E.g., a tablet could recognize geometric shapes as well as symbols and render them more neatly than they are drawn. A tablet can also provide zoom-in and zoom-out as well as a virtual sheet of effectively unlimited size. Erasure of large areas should also be easier on a tablet (one might want strike-through or crossing-out to implement erasing), as would cut-and-paste and moving blocks of content (this would include the ability to write content, put it inside a rectangle, e.g., and have it automatically resized to fit). Hyperlinks to other documents and dynamic adjustment of detail would also be valuable (something like inlining footnotes could be useful; I find that when I write down thoughts I encounter side-thoughts which belong with the main thought group but are peripheral, explanatory, or otherwise not appropriate to a higher-level view [in writing this leads to parenthetical comments within parenthetical comments]). Layering could also be used, somewhat like transparencies, to conveniently express certain relationships of information. In addition, changing the color and size of the ‘pen’ should be much easier than with traditional pen and paper (as should flood-fill). (Providing lines or a grid could also be useful, with the ability to hide them temporarily as well as to make objects snap to the grid.)

One problem with having the computer make the writing more like typing in appearance is that delayed feedback could be annoying (in particular knowing whether a particular writing is legible to the computer is important [having a quick way to correct or even merely constrain the computer's choices could be good]) but early guesses by the computer could be even more annoying. (In some cases, off-line interpretation of writings could be useful as such could use a larger context, use much more computation, and provide feedback about uncertain aspects of a ‘translation’ [such feedback would be distracting during composition but could be preferred over making assumptions when the writer is in an editing/review mindset].)

(Human-computer interfaces could also be improved by exploiting user context [e.g., this user often draws flowcharts, that user often sketches biological structures] and document context, ideally with minimal additional human input and the ability to explicitly correct errors or change modes with minimal effort. Being able to provide vocal input could also be useful, but such is limited by the need to avoid the voicing being heard by others [whether for privacy or to avoid annoyance]. A virtual reality helmet [or even a mask over the mouth] would probably be too cumbersome and unfashionable for most users, and sound cancellation would probably not be practical technologically in the near term. [A helmet would have the additional advantage that a set of relatively large antennae could be included. Combining the protective elements of a traditional helmet with the above I/O aspects could be useful; there may already be motorcycle helmets that would facilitate listening to a radio and talking on a cell phone while riding a motorcycle. {On a science-fictional note, a constant covering of the face could remove some social cues, possibly reducing effective racism, and allow more conscious control of one's presentation of oneself: coloration and patterning could be dynamically changed to express mood, and voice synthesis could hide or transform vocal cues. One might even have most communication be directed rather than broadcast as current vocal communication is.}])