Touchscreens demand more of UI (user interface) design and development methodologies than conventional displays do. To select the right UI technology, designers should consider the following topics.

1) All-inclusive designer toolkit. Because the touchscreen changes the UI paradigm, one of the most important aspects of UI design is how quickly the designer can see the behavior of the UI under development. Ideally, the UI technology includes a design tool that lets the designer immediately observe the behavior of a newly created UI and modify it easily before deploying it to the target.

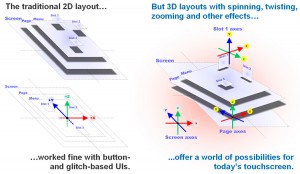

2) Creation of the “wow factor.” It is essential that the UI technology lets developers, and even end users, easily create clever little “wow factors” on the touchscreen UI. Technologies that allow rapid creation and radical customization of the UI have a significant impact on the overall user experience.

3) Controlling the BoM (bill of materials). For UIs, everything is about the look and feel, ease of use, and how well the UI reveals the capabilities of the device. In some situations, pairing a high-resolution screen with a low-end processor is all that’s required to deliver a compelling user experience. Equally important is how much the selected UI technology reduces the engineering costs of UI work. Adopting a technology that separates UI creation from software development allows better user experiences without raising the BoM.

4) Code-free customization. Ideally, all visual and interactive aspects of a UI should be configurable without recompiling the software. This can be achieved by providing mechanisms to describe the UI’s characteristics declaratively, for example in a description file that the software reads at run time. Such a capability allows rapid customization without any changes to the underlying embedded code base.

5) Open standard multimedia support. To enable the rapid integration of any type of multimedia content into a product’s UI, regardless of the target hardware, some form of API standardization must be in place. The OpenMAX standard addresses this need by providing a framework for integrating multimedia software components from different sources, making it easier to exploit silicon-specific features such as video acceleration.
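To make the code-free customization of item 4 more concrete, here is a minimal sketch in Python. It assumes a hypothetical JSON description format; the field names (`widgets`, `id`, `label`, `color`, and so on) and the helper functions are invented for illustration and do not correspond to any particular UI toolkit. The point is only that the look of the UI lives in data that can be edited without touching or recompiling the application code.

```python
import json

# Hypothetical declarative UI description. In a real product this would
# live in a separate file edited by the designer, not inside the code.
UI_DESCRIPTION = """
{
  "screen": "home",
  "widgets": [
    {"type": "button", "id": "play", "label": "Play",
     "x": 20, "y": 40, "color": "#00aaff"},
    {"type": "label", "id": "title", "label": "Now Playing",
     "x": 20, "y": 10, "color": "#ffffff"}
  ]
}
"""


def load_ui(description: str) -> dict:
    """Parse a UI description and index its widgets by id."""
    data = json.loads(description)
    return {widget["id"]: widget for widget in data["widgets"]}


def describe(widget: dict) -> str:
    """Stand-in for real rendering: summarize how a widget would be drawn."""
    return (
        f'{widget["type"]} "{widget["label"]}" '
        f'at ({widget["x"]}, {widget["y"]}) in {widget["color"]}'
    )


widgets = load_ui(UI_DESCRIPTION)
for widget in widgets.values():
    print(describe(widget))
```

Changing a color, label, or position in the description changes what `describe` reports, while `load_ui` and `describe` themselves stay untouched. A production system would render the widgets on the target hardware instead of printing them.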

Just recently, Apple replaced Microsoft as the world’s largest technology company by market capitalization. This is a good example of how a company that produces innovative, user-friendly products with compelling user interfaces can fuel the growth of technology into new areas. Remember, the key isn’t necessarily the touchscreen itself, but the user interfaces running on it. Let’s see what the vertical markets can do to take user interface and touchscreen technology to the next level!