The ninth annual Research@Intel Day, held at the Computer History Museum, showcased more than 30 research projects demonstrating the company’s latest innovations in energy, cloud computing, user experience, transportation, and new platforms.

Intel CTO Justin Rattner made one of the most interesting announcements: the creation of a new research division called Interaction and Experience Research (IXR).

I believe IXR’s task will be to determine the nature of the next must-have system and the processors and interfaces that will make it successful.

According to Justin Rattner, you have to go beyond technology. Better technology is no longer enough, because individuals today value a deeply personal information experience. This suggests that Intel’s target is the individual, a person who could be a consumer and/or a corporate employee. But how do you find out what that individual, who represents most of us, will need beyond the systems and software already available today?

To try to hit that moving target, Intel has been building up its capabilities in user experience and interaction since the late nineties. One of the achievements was the Digital Health system, now a separate business division. It started out as a research initiative in Intel Labs (formerly the “Corporate Technology Group,” or CTG), with the objective of finding out how technology could help in the health space.

Intel’s most recent effort has been to assemble the IXR research team, consisting of both user interface technologists and social scientists. The IXR division is tasked with helping to define and create new user experiences and platforms in many areas, including television, automobiles, and signage. The new division will be led by Intel Fellow Genevieve Bell, a leading advocate of user-centered design at Intel for more than ten years.

Bell, a researcher raised in Australia, received her bachelor’s degree in anthropology from Bryn Mawr College in 1990 and her master’s and doctorate in anthropology from Stanford University in 1993 and 1998, respectively; she also lectured in Stanford’s Department of Anthropology. In her presentation, Bell explained that she and her team will be looking into the ways in which people use, re-use, and resist new information and communication technologies.

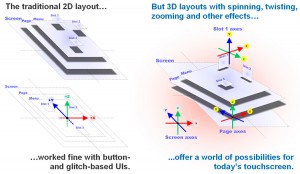

To envision the next must-have system, Intel’s IXR division is expected to bridge social research, design enabling, and technology research. The team’s social science, design, and human-computer interaction researchers will continue existing work, asking questions to find out what people will value and what will fit into their lives. New systems, software, and user interactions, as well as changes in media content and consumption, could emerge from combining the data gathered this way with research into the next generation of user interfaces.

Bell showed a photo of a child using his nose to key in data on his mobile phone. It is an example of a user-preferred interface that may seem strange, but it can be part of the data social scientists use to define innovations that may shape the human I/O of 2020. The example also raised a question that was not addressed: how do you obtain relevant data without putting a scale model or an actual system in the hands of your sample population, so that they can review it, improve it, or even reject it and send you back to start from scratch?

In addition to its very large investment in US-based research, Intel owns labs elsewhere and collaborates with or supports more than 1,000 researchers worldwide. According to Intel, 80% of the work at Intel Labs China focuses on embedded-system research. Intel Labs Europe conducts research spanning a wide spectrum, from nanotechnology to cloud computing. Intel Labs Europe’s website lists research and collaboration at 17 sites, and the list doesn’t even include the recently announced ExaScience Lab in Flanders, Belgium.

But Intel is not focused only on the long term. Two examples of practical solutions to today’s problems are the Digital Health system, which can link the patient at home with the doctor at the clinic, and the connected vehicle (misinterpreted by some reporters as an airplane-style black box for automobiles).

In reality, according to Intel, the connected vehicle focuses on personal and vehicle safety. For example, when someone attempts to break into the vehicle, the owner can view captured video on a mobile device. Or, for personal safety and a better driving experience, a destination-aware connected system could track vehicle speed and location to provide real-time navigation based on information about other vehicles and detours in the immediate area.

Both of these life-improving systems still need wider acceptance among the different groups of people who perceive their end use in different ways.

Why is Intel researching such a wide range of disciplines, and what, if anything, is still missing? Justin Rattner himself has given us one of the answers.

I believe that Rattner’s comment, “It’s no longer enough to have the best technology,” reflects the industry’s trend in microprocessors, cores, and systems. SoCs are increasingly tailored to the exact configuration of the OEM system that will use them. They are being designed to deliver precisely the price-performance needed, at the lowest power, for the target workload. For most buyers that workload has become a mix of general-purpose processing and multimedia, with multimedia dominant.

The microprocessor’s role can no longer be fixed or easily defined, since the SoCs incorporating it can be configured in countless ways to fit their systems. Heterogeneous chips execute code on a mix of general-purpose processors, DSPs, and hardwired accelerators. Homogeneous SoC configurations employ multiple identical cores that together deliver the required performance. Nor have most processor architectures been spared: their ISAs are being extended and customized to fit target applications.

Special-purpose ISAs have emerged that try, and most of the time succeed, to reduce power and silicon real estate for specific applications. Processor ISA IP owners and enablers are helping SoC architects who want to customize their core configuration and ISA. A few examples include ARC (now part of Virage Logic, and possibly soon Synopsys), Tensilica, and FPGA suppliers such as Altera and Xilinx. ARM and MIPS offer their own flavors of configurability. ARM offers so many ISA enhancements across its different cores that, aside from basic compatibility, its ISA can be considered a “ready-to-program” application-specific ISA, while MIPS leaves most of its permitted configurability to the SoC architect.

In view of this rapidly changing landscape, the importance of advanced social research for Intel, and for that matter for anybody in the same business, cannot be overstated. Although it may not be perceived as such, Intel has already designed processors to support specific applications.

The simple, lower-performance but power-efficient Atom core was introduced to populate mobile notebooks and netbooks. The Moorestown platform brings processing closer to the needs of low-power mobile systems, while the still-experimental SCC (Intel’s Single-chip Cloud Computer) is configured to best execute data searches in servers.

It will also be interesting to see what an SCC-like chip could do in tomorrow’s desktop.

If Intel’s focus is mainly on the processing platform, as it may well be, what seems to be missing, and who is responsible for the rest? The must-have system of the future must run successful programs and user interface software. While Intel funds global research that is perceived to focus mostly on processors and systems, who is working on the end-use software? I don’t see the large software companies engaging in similar research on their own or in cooperation with the efforts of Intel or other chip and IP companies. And we have not been told precisely how a new system incorporating hardware and software created by leading semiconductor and software scientists will be transferred from the drawing board to system OEMs. An appropriate variant of Taiwan’s ITRI model comes to mind, but only time will tell.