In a recent conversation, Ken Maxwell, President of Blue Water Embedded, mentioned several times how third-generation touch controllers apply dedicated hardware resources to encapsulate and offload some of the processing needed to deliver robust touch interfaces. We talked about his use of the term third-generation, as he did not seem entirely comfortable with it. However, I believe it is the most appropriate term: it is consistent with my observations about third-generation technologies, and it is what prompted me to start this hands-on project with touch development kits in the first place.

While examining technology inflection points, I have noticed that technological capability is only one part of an industry inflection built around that technology. The implementation must also hide complexity from the developer and user; integrate subsystems to lower costs, shorten schedules, and simplify learning curves; and pull together multi-domain components and knowledge into a single package. Two big examples of inflection points occurred around the third generation of a technology or product: Microsoft Windows and the Apple iPod.

Windows reached an inflection point at version 3.0 (five years after version 1.0 was released) when it simplified the management of the vast array of optional peripherals available for the desktop PC and hid much of the complexity of sharing data between programs. Users could already transfer data among applications, but they needed to use explicit translation programs and tolerate the loss of data from special features. Windows 3.0 hid the complexity of selecting those translation programs and provided a data-interchange format and mechanism that further improved users’ ability to share data among applications.

The third-generation iPod reached an industry inflection point with the launch of the iTunes Music Store. The accompanying software, billed as the “world’s best and easiest to use ‘jukebox’ software,” introduced a dramatically simpler user interface that needed little or no instruction to get started, and it introduced more people to digital music.

Touch interface controllers and development kits are at a similar third-generation crossroads. First-generation software drivers for touch controllers required the target or host processor to drive the sensing circuits and perform the coordinate mapping. Second-generation touch controllers relieved the target processor of some of that work by including dedicated hardware resources to drive the sensors, and they abstracted the sensor data the target processor worked with into pen-up/down and coordinate location information. Second-generation controllers still require significant target-processor resources to manage debounce processing and to reject bad touches such as palm and face presses.
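To make the debounce burden concrete, here is a minimal sketch of the kind of logic a host might run on top of a second-generation controller's raw pen-up/down reports. It is an illustration under stated assumptions, not any vendor's driver: the `Debouncer` class, its `hold_samples` threshold, and the fixed-rate sampling model are all hypothetical.

```python
class Debouncer:
    """Accept a pen-up/down change only after it persists for several samples.

    Hypothetical host-side helper: `hold_samples` is an illustrative
    threshold, not a value taken from any real controller.
    """

    def __init__(self, hold_samples: int = 3) -> None:
        self.hold_samples = hold_samples
        self.stable = False  # debounced state: False = pen up, True = pen down
        self._count = 0      # consecutive samples that disagree with `stable`

    def update(self, raw_down: bool) -> bool:
        """Feed one raw sample and return the debounced pen state."""
        if raw_down == self.stable:
            # Raw input agrees with the debounced state: discard any glitch.
            self._count = 0
        else:
            self._count += 1
            if self._count >= self.hold_samples:
                # The change has persisted long enough to be believed.
                self.stable = raw_down
                self._count = 0
        return self.stable


if __name__ == "__main__":
    debouncer = Debouncer(hold_samples=3)
    raw_samples = [False, True, False, True, True, True, True, False, True]
    print([debouncer.update(sample) for sample in raw_samples])
```

Every sample costs the host a call like `update`, which is the sort of per-sample bookkeeping that moves into the controller in later generations.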

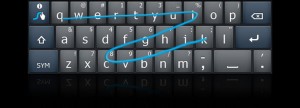

Third-generation touch controllers integrate even more dedicated hardware and software to offload more context processing from the target processor: handling debounce processing, reporting finger or pen flick inputs, correctly resolving multi-touch inputs, and rejecting bad touches from palm, grip, and face presses. Depending on the sensor technology, third-generation controllers are also going beyond the simple pen-up/down model by supporting hover or mouse-over emulation. The new typing method supported by Swype pushes the pen-up/down model yet another step further by combining multiple touch points within a single pen-up/down event.
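As a rough picture of the context processing being offloaded, the sketch below classifies a single stroke as a rejected touch, a tap, a flick, or a drag. Everything in it is an assumption made for illustration: the `classify_stroke` function, the sample format, and the three thresholds are hypothetical, and real controllers use their own, typically proprietary, criteria.

```python
import math
from typing import List, Tuple

# One sample of a stroke: (time in ms, x in mm, y in mm). Hypothetical format.
Sample = Tuple[float, float, float]

# Illustrative thresholds only; not taken from any real controller.
PALM_AREA_MM2 = 150.0         # contacts at least this large are treated as palm/face
TAP_MAX_TRAVEL_MM = 2.0       # strokes that move no farther than this are taps
FLICK_MIN_SPEED_MM_MS = 0.5   # moving strokes at least this fast are flicks


def classify_stroke(samples: List[Sample], contact_area_mm2: float) -> str:
    """Return 'rejected', 'tap', 'flick', or 'drag' for one pen-down..pen-up stroke."""
    if not samples:
        raise ValueError("a stroke needs at least one sample")
    if contact_area_mm2 >= PALM_AREA_MM2:
        return "rejected"  # too large to be a fingertip or pen

    t0, x0, y0 = samples[0]
    t1, x1, y1 = samples[-1]
    travel = math.hypot(x1 - x0, y1 - y0)  # straight-line distance, start to end
    if travel <= TAP_MAX_TRAVEL_MM:
        return "tap"

    duration = max(t1 - t0, 1e-9)  # guard against a zero-length time span
    speed = travel / duration
    return "flick" if speed >= FLICK_MIN_SPEED_MM_MS else "drag"


if __name__ == "__main__":
    quick_swipe = [(0.0, 10.0, 10.0), (50.0, 50.0, 10.0)]
    print(classify_stroke(quick_swipe, contact_area_mm2=40.0))
    print(classify_stroke(quick_swipe, contact_area_mm2=300.0))
```

With a third-generation controller, the target processor would receive the equivalent of the returned label rather than computing it from raw samples.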

Is it a coincidence that touch interfaces seem to be crossing an industry inflection point with the advent of third-generation controllers, or does this relationship also show up in other domains? Does your experience support this observation?

[Editor's Note: This was originally posted on Low-Power Design]