The new Microsoft Surface 2.0 will become available to the public later this year. The technology has changed significantly since the first version was introduced in 2007. The most obvious change is that the newer unit is much thinner, so much so that the 4-inch-thick display can be wall mounted, effectively enabling it to act like a large-screen 1080p television with touch capability. Not only is the new display thinner, but the list price has also nearly halved, to $7,600. While the current production versions of the Surface are impractical for embedded developers, their sensing technology is quite different from other touch technologies and may represent another approach to touch user interfaces, one that could compete with existing forms of touch sensing.

Namely, the touch sensing in the Surface is not really based on sensing touch directly; rather, it uses IR (infrared) sensors that visually sense what is happening around the touch surface. This enables the system to sense and interact with nearly any real-world object, not just conductive objects, as with capacitive touch sensing, or physical pressure, as with resistive touch sensing. For example, there are sample applications of the Surface working with a real paint brush (without paint on it). The system can also identify objects that carry identification markings, in the form of a pattern of small bumps, and then track those objects and infer additional information about them, which other touch-sensing technologies currently cannot do.

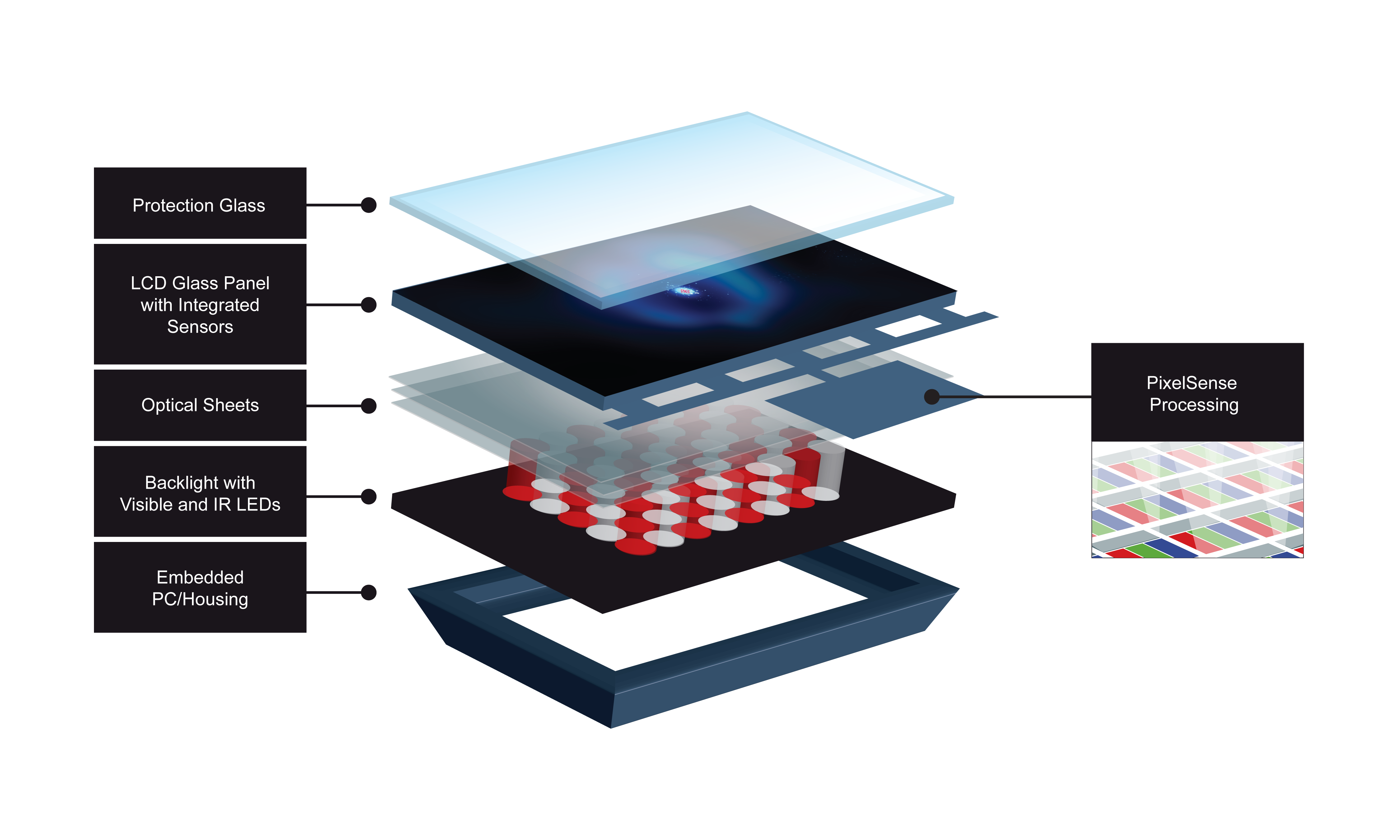

This exploded view of the Microsoft Surface illustrates the various layers that make up the display and sensing housing of the end units. The PixelSense technology is embedded into the LCD layers of the display.

The vision sensing technology is called PixelSense Technology; it senses the outlines of objects near the touch surface and distinguishes when they are touching it. Note: I would include a link to the PixelSense Technology at Microsoft, but it is not available at this time. The PixelSense Technology embedded in the Samsung SUR40 for Microsoft Surface replaces the five (5) infrared cameras that the earlier version relied on. The SUR40 for Microsoft Surface is the result of a collaborative development effort between Samsung and Microsoft. Combining the Samsung SUR40 with Microsoft's Surface Software enables the entire display to act as a single aggregate of pixel-based sensors that are tightly integrated with the display circuitry. This shift to an integrated sensor is what allows the finished casing to be substantially thinner than previous versions of the Surface.

The figure highlights the different layers that make up the display and sensing technology. The layers are analogous to those of any LCD display, except that the PixelSense sensors are embedded in the LCD layer and do not affect the original display quality. The optical sheets use materials chosen to increase the viewing angle and to enhance IR light transmissivity. PixelSense relies on IR light generated at the backlight layer and detects its reflections from objects above and on the protection layer. The sensors are located below the protection layer.
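To make the hover-versus-touch distinction concrete, here is a minimal Python sketch that labels each pixel of a single IR frame by reflected intensity. It assumes that an object in contact with the protection layer reflects more of the backlight's IR than one hovering above it. The threshold values (`HOVER_THRESHOLD`, `TOUCH_THRESHOLD`) and the `classify_frame` function are hypothetical illustrations, not PixelSense parameters or Microsoft's algorithm; a real system would likely also have to account for object material and ambient IR.

```python
# Hypothetical thresholds on an assumed 0-255 IR intensity scale. These are
# illustrative values only, not parameters of PixelSense or the Surface SDK.
HOVER_THRESHOLD = 60
TOUCH_THRESHOLD = 180

# Pixel labels.
BACKGROUND, HOVER, TOUCH = 0, 1, 2


def classify_frame(frame, hover_threshold=HOVER_THRESHOLD,
                   touch_threshold=TOUCH_THRESHOLD):
    """Label each pixel of an IR frame (a list of rows of ints).

    Assumes contact produces a brighter reflection than hovering:
    values >= touch_threshold are TOUCH, values >= hover_threshold
    are HOVER, and everything else is BACKGROUND.
    """
    if not hover_threshold < touch_threshold:
        raise ValueError("hover_threshold must be below touch_threshold")
    labels = []
    for row in frame:
        out = []
        for value in row:
            if value >= touch_threshold:
                out.append(TOUCH)
            elif value >= hover_threshold:
                out.append(HOVER)
            else:
                out.append(BACKGROUND)
        labels.append(out)
    return labels


if __name__ == "__main__":
    frame = [
        [10, 20, 90, 15],
        [12, 200, 210, 80],
        [11, 190, 70, 14],
    ]
    for row in classify_frame(frame):
        print(row)  # e.g. the middle row prints [0, 2, 2, 1]
```

Two fixed thresholds keep the idea visible; adaptive or per-region thresholds would be a natural refinement under the same assumption.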

The Surface software targets an embedded AMD Athlon II X2 dual-core processor operating at 2.9 GHz, paired with an AMD Radeon HD 6700M Series GPU that, using DirectX 11, acts as the vision processor. Applications use an API (application programming interface) to access the algorithms running on the embedded vision processor. In the demonstration of the Surface that I saw, the system fed the IR sensor output to a display where I could see my hand and objects above the protection layer. The difference between an object hovering over the protection layer and one touching it is quite obvious. The sensor and embedded vision software can detect and track more than 50 simultaneous touches. Supporting that many touches is necessary because the Surface's use case is multiple people issuing gestures to the touch system at the same time.
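The post does not describe how the vision software tracks dozens of simultaneous touches, so the following Python sketch shows one common, generic approach rather than the Surface's actual method. It finds contacts as 4-connected blobs in a binary touch mask (for example, one produced by thresholding the IR image) and then keeps a stable ID per contact across frames with greedy nearest-neighbor matching. The names `find_contacts`, `TouchTracker`, and `max_distance` are hypothetical and are not part of the Surface SDK.

```python
import math
from collections import deque


def find_contacts(mask):
    """Return (row, col) centroids of 4-connected regions of truthy pixels."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects one connected blob.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            cy = sum(p[0] for p in pixels) / len(pixels)
            cx = sum(p[1] for p in pixels) / len(pixels)
            centroids.append((cy, cx))
    return centroids


class TouchTracker:
    """Greedy nearest-neighbor tracker keeping a stable ID per contact."""

    def __init__(self, max_distance=3.0):
        self.max_distance = max_distance  # assumed pixel radius for "same touch"
        self.tracks = {}                  # track id -> (row, col)
        self._next_id = 0

    def update(self, centroids):
        # All (distance, track id, centroid index) pairs, closest first.
        pairs = sorted(
            (math.dist(pos, cen), tid, i)
            for tid, pos in self.tracks.items()
            for i, cen in enumerate(centroids)
        )
        new_tracks = {}
        used = set()
        for dist, tid, i in pairs:
            if dist > self.max_distance:
                break  # remaining pairs are even farther apart
            if tid in new_tracks or i in used:
                continue
            new_tracks[tid] = centroids[i]
            used.add(i)
        # Unmatched centroids become new touches; unmatched tracks are
        # dropped, i.e. treated as lifted.
        for i, cen in enumerate(centroids):
            if i not in used:
                new_tracks[self._next_id] = cen
                self._next_id += 1
        self.tracks = new_tracks
        return dict(new_tracks)


if __name__ == "__main__":
    tracker = TouchTracker()
    frame1 = [[1, 1, 0, 0, 0],
              [1, 1, 0, 0, 0],
              [0, 0, 0, 0, 1]]
    frame2 = [[0, 1, 1, 0, 0],
              [0, 1, 1, 0, 0],
              [0, 0, 0, 0, 0]]
    print(tracker.update(find_contacts(frame1)))  # two new touches, IDs 0 and 1
    print(tracker.update(find_contacts(frame2)))  # touch 0 moved, touch 1 lifted
```

Greedy matching is simple and fast enough for a few dozen contacts; an optimal assignment (for example, the Hungarian algorithm) would be a drop-in alternative if touches frequently cross paths.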

This technology offers exciting capabilities for end-user applications, but it is currently neither appropriate for, nor available to, general embedded designs. However, as the technology evolves, the price should continue to drop and the vision algorithms should mature so that they run more efficiently, requiring less compute performance from the vision processors (most likely thanks to specialized hardware accelerators for vision processing). The ability to recognize and work with real-world objects is a compelling capability that current touch technologies lack and may never acquire. While the Microsoft representative I spoke with said the company is not looking at bringing this technology to applications outside the fully integrated Surface package, I believe the technology will become compelling for embedded applications sooner rather than later. At that point, experience with vision processing (as distinct from image processing) will become a valuable skill set.


Hi,

This particular technology is about embedding touch sensing within the surface itself. But if there were a technology that did not require modifying the screen's properties and could be used purely as an add-on to an existing system, wouldn't that be better?

Regards,

sreesha