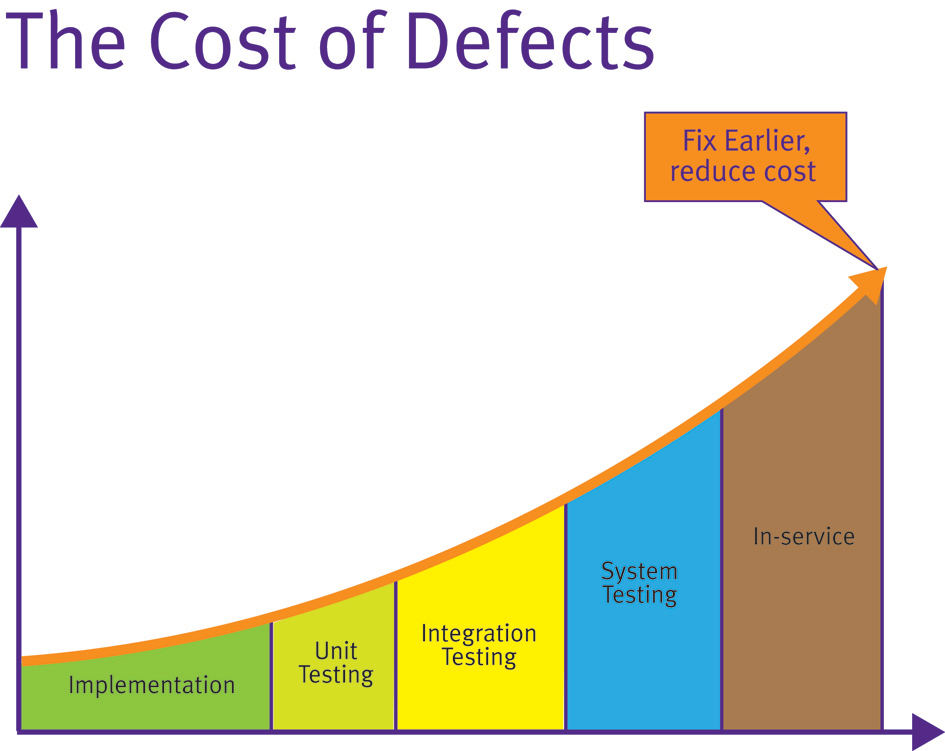

When are unit test tools justifiable? Ultimately, the justification of unit test tools comes down to a commercial decision. The later a defect is found in product development, the more costly it is to fix (Figure 1). This concept was established as early as 1975 with the publication of Brooks’ “The Mythical Man-Month”, and it has been confirmed many times since by various studies.

The automation of any process changes the dynamic of commercial justification. This is especially true of test tools given that they make earlier unit test more feasible. Consequently, modern unit test almost implies the use of such a tool unless only a handful of procedures are involved. Such unit test tools primarily serve to automatically generate the harness code which provides the main and associated calling functions or procedures (generically “procedures”). These facilitate compilation and allow unit testing to take place.

The tools not only provide the harness itself, but also statically analyze the source code to provide the details of each input and output parameter or global variable in an easily understood form. Where unit testing is performed on an isolated snippet of code, stubbing of called procedures can be an important aspect of unit testing. This can also be automated to further enhance the efficiency of the approach.

This automation makes assigning values to the inputs of the procedure under test a simpler process, and one that demands little intimate knowledge of the code on the part of the test tool operator. This distance provides the unit test objectivity that is sometimes necessary, because it divorces the test process from code development where circumstances require it; and, from a pragmatic perspective, it substantially lowers the level of skill required to develop unit tests.

This ease of use means that unit test can now be considered a viable development option with each procedure targeted as it is written. When these early unit tests identify weak code, the code can be corrected immediately while the original intent remains fresh in the mind of the developer.

Automatically generating test cases

Generally, the output data generated through unit tests is an important end in itself, but this is not always the case. There may be occasions when the fact that the unit tests have completed successfully is more important than the test data itself. This happens when source code is tested for robustness. To provide for such eventualities, test tools can be used to generate test data automatically, as well as the test cases. High levels of code execution coverage can be achieved by this means alone, and the resulting test cases can be complemented by manually generated test cases in the usual way.

An interesting application for this technology involves legacy code. Such code is often a valuable asset, proven in the field over many years, but likely developed on an experimental, ad hoc basis by a series of expert “gurus” – expert at getting things done and in the application itself, but not necessarily at complying with modern development practices.

Frequently this SOUP (software of unknown pedigree) forms the basis of new developments which are obliged to meet modern standards either due to client demands or because of a policy of continuous improvement within the developer organization. This situation may be further exacerbated by the fact that coding standards themselves are the subject of ongoing evolution, as the advent of MISRA C:2004 clearly demonstrates.

If code needs to be redeveloped to meet such standards, then there is a need not only to identify the aspects of the code that do not meet them, but also to ensure that in doing so the functionality of the software is not altered in unintended ways. The existing code may well be the soundest, or only, documentation available, and so a means needs to be provided to ensure that it is treated as such.

Automatically generated test cases can be used to address just such an eventuality. By generating test cases from the legacy code and applying them to the rewritten version, it can be demonstrated that the only changes in functionality are those deemed desirable at the outset.

The Apollo missions may have seemed irrelevant at the time, and yet hundreds of everyday products were developed or modified using aerospace research—from baby formula to swimsuits. Formula One racing is considered a rich man’s playground, and yet British soldiers benefit from the protective qualities of the light, strong materials first developed for racing cars. Hospital patients and premature babies stand a better chance of survival than they would have done a few years ago, thanks to the transfer of F1 know-how to the medical world.

Likewise, unit testing has long been perceived to be a worthy ideal: an exercise for those few involved with the development of high-integrity applications, with budgets to match. But the advent of unit test tools offers mechanisms that optimize the development process for all. The availability of such tools has made this technology, and unit testing itself, an attractive proposition for applications where sound, reliable code is a commercial requirement, rather than only for those applications with a life-and-death imperative.


Hello Mark,

1. I totally agree: the cost of fixing a defect increases exponentially as time goes on. This is the only point I can agree with. The rest of the article shows a real misconception about the difference between a “unit test” and “a test made by a developer”. Also, the figure reflects a typical non-agile development strategy; the same steps can be performed in an agile way following the continuous development principle, which also means continuous build, continuous test, continuous integration and continuous deployment.

2. As an agile developer in the embedded market, I can’t agree with the general meaning the article gives to unit test. A unit test case written without adopting a test-driven development or, better, a behaviour-driven development cycle is a false solution, simply because it can’t ensure that I write the source code I really need to meet the requirement I need to test.

3. What the article misses is the most important advantage of unit tests: the direct link between requirements and unit tests. Without that link, unit tests are no different from developer tests (mostly done with a debugger and so not really traceable), their only benefit being a record of which tests the developer performed. It means the developer has missed the need for executable requirements agreed with the customer (the customer may also be the internal product manager).

Writing the unit test code after the software under test is just as wrong as writing the requirements after the application software. A unit test function has to be written before the code under test simply because it is the “executable translation” of a requirement; so if we agree that requirements have to be written before the application, we have to agree that unit test functions have to be written before the code they test.

4. Automatic test generation for non-legacy code is only a defensive strategy (for management) that tries to enforce better-quality software without increasing development costs, because management tends to think that unit tests are just more software to write, and so increase the SLOC or function points (or whatever other metric they use) needed to develop the product. I know managers who computed the ratio of application code to unit test and acceptance test code to show the increased cost of development, as a reason not to embrace an agile development strategy and the unit test strategy that underlies it.

5. About legacy code: reusing its software is common-sense practice for a company, because working software is part of the company’s know-how; it was an investment and should be used as much as possible. Company procedures generally demand more documentation than testing before software is reused, but the real question is: how much of this know-how is well tested, and how well are developers able to reuse it?

Developers should learn from the Ariane 5 and Therac-25 software failures to improve the way they reuse source code. Both failures would have been discovered in the early stages of the projects using BDD and TDD approaches.

So if I am not sure that a piece of legacy software can help me, I simply write my application using BDD, and when I need to pass my tests I integrate the legacy module (I mean a class, a package or a function). If it passes the tests, fine; if not, I correct it and commit the update to the source version repository (together with the tests used to verify the code) for the benefit of all developers. This is the safest source code reuse practice I have learned in the last 25 years.

6. Using an auto-generated unit test fixture to test legacy code has the very bad effect that the developer believes they have a safe piece of source code (supposing all tests pass), but this is not true, especially in the embedded world. Reusing a well-made, working piece of code (also well tested following the ESA test procedures) in a different context was the real cause of the Ariane 5 failure (the rocket self-destructed on its first flight, with all its payload), yet that code had passed all the original tests (they weren’t updated to take account of the different data ranges of Ariane 5 compared with Ariane 4 in the calling function).

7. About unit test becoming “commercially attractive” thanks to automatic test generation: this is just the latest attempt to sell what I call “the latest failing silver bullet application”. A company does the best business when it develops and sells high-quality products with zero or very few defects, at the right price/benefit ratio, within the market window of opportunity, which for high-tech products keeps shrinking; this sort of business culture goes well beyond unit test automation tools.

Massimo Manca, thank you for your comments.

You may be surprised that I agree entirely with your points for the market I suspect you work in. This article deals specifically with the automatic generation of unit tests and the fact that they make the relevant tools accessible to people who cannot generally justify the investment you imply, which is perhaps the point you miss.

From a commercial perspective, software (like anything else) needs to be of adequate quality. That doesn’t necessarily always mean the best quality – there are all sorts of commercial judgements to make such as the cost of failure and time to market. It is analogous to the fact that if a small Fiat (or Ford, or Skoda, or whatever) was made to the same quality standards as a Rolls-Royce then it would become far too expensive to compete.

Likewise, not everyone is writing safe code. Not everyone is working to the levels of excellence you are clearly aspiring to; for some people, reassurance by this means is a step forward from their present practice of functional testing only. That doesn’t necessarily make them wrong. It may just mean that they have different criteria to work by.

I’d encourage you to look at other articles by me in particular and by LDRA in general; you will see that our sphere of influence extends through the requirements traceability you mentioned and on to object code verification, which goes beyond your observations in pursuit of excellence when that is required.

Hello Mark,

Did you read what the NASA team said about the unintended acceleration of some Toyota cars? It said that with current testing tools there is no way to find the error, or even to establish whether there is one. This is not good news, and I am very surprised the team said this.

Next week I will read the NASA report on the software inspection and say more.

The real question is how much it costs to develop software/firmware in an agile way compared with a more traditional way.

Agile development lowers development costs by between 30% and 70%, depending on the embedded sub-market, because it shortens development time by an order of magnitude.

I don’t work in a single market: most of the time I work in the industrial, small appliance and white goods markets, sometimes in automotive, but also in other markets, and I also help companies solve the problems they already have with existing products.

Given today’s embedded software complexity, without good testing practice you can expect about 15 to 50 errors per 1000 lines of C/C++ code (SLOC). Fixing just 20% of these errors would satisfy about 80% of customers (the Pareto principle). But how much does it cost to correct these errors? In embedded markets it seems that delivering a product with 20% fewer errors raises the cost of the whole development to about three times that of developing the product with agile techniques from the beginning, not counting customer service costs in either case.

With a traditional development cycle a C/C++ SLOC may cost between $15 and $40 in the USA; with agile techniques it is about $6 to $9. And with good agile development starting from executable requirements, we have about 1 to 3 errors per 100,000 SLOC, which we normally correct during the continuous building/testing of the application (these are the only errors a TDD/BDD unit test fixture leaves), so we don’t add many weeks to fixing application errors.

Many times I have considered taking the original software and rewriting it from scratch because of its poor quality and poor testing. I learned that this pays off only if the product is not too big, perhaps under 100,000 SLOC of C or C++, and if you have two or three months to work on it.

If the project is bigger, it normally pays better to derive executable acceptance tests from the requirements (preferably after a short review of them) to represent those requirements, prioritize them, and execute them to find and fix one bug at a time.

The project quality increases day by day, and the customer gains confidence that I will deliver a solution.

In the past I evaluated the LDRA solution and also used it at a customer site. It is attractive, but it didn’t help me much; only the MISRA C conformance checks saved me from checking the code manually line by line.

To find difficult bugs within the limited budgets of many customers, I prefer to instrument the source code down to the C statement level (yes, I mean if, while, for and so on) and save the debug output to an internal or external file. Code coverage also helps for C source code, but not so much for C++ if you use a lot of templates and class hierarchies/derivation.

So the problem is not the tools but this particular practice, automatic unit test generation: good tests are not the kind you can generate automatically.