The market for tablet computers is exploding, with an estimated 100 million units expected to be in use by 2013. The tablet form factor is compelling because of its small size, light weight, and long battery life. Tablet operating systems such as Android, MeeGo, and iOS have also been designed around touch input, which makes their applications fun and easy to use.

One such tablet, the Apple iPad, has been a phenomenal success as a media-consumption device for watching movies, reading e-mail, surfing the Web, playing games, and more. But one thing keeps it from being the only device you need on a business trip: pen input. Pen input enables handwriting and drawing, and that matters because the way our brain coordinates our arms, elbows, wrists, and fingers gives us much finer motor control when we hold a tool. Everybody needs a pen, from children learning to draw and write to engineers sketching a design and business people annotating a contract.

Accordingly, the ultimate goal for tablet designers should be to replicate the pen-and-paper experience. Pen and paper work so well together: resolution is high, there is no lag between the pen movement and the depositing of ink, and you can comfortably rest your palm on the paper, with its pleasing texture.

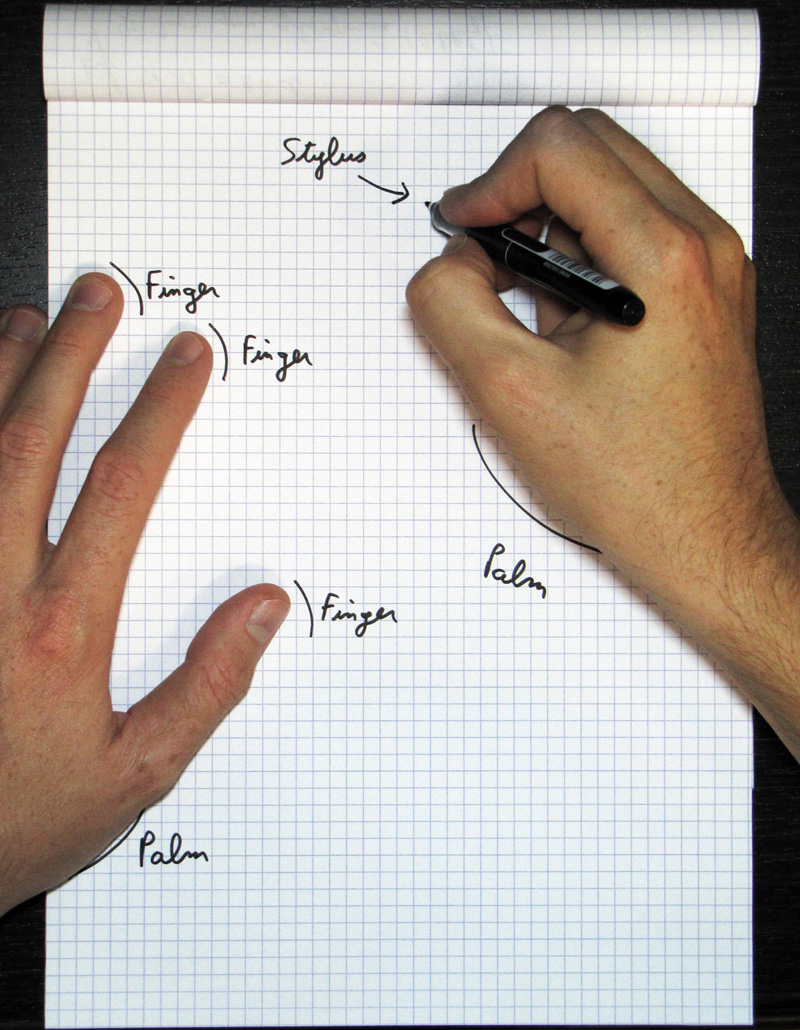

These qualities, however, are not easy to replicate in a tablet computer. You need high responsiveness, high resolution, good ink rendering, and palm rejection. Because writing on a sheet of glass with a plastic stylus is not an especially pleasant experience, you also need a coating on the glass and a stylus whose shape and feel approximate the pen-and-paper experience. Most importantly, you need an operating system and applications designed from the ground up around this input method.

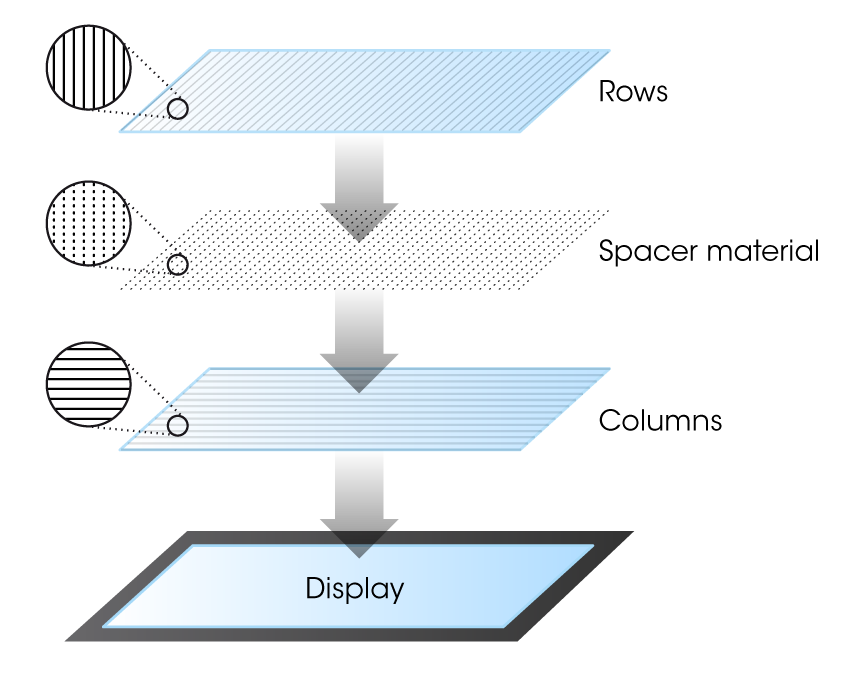

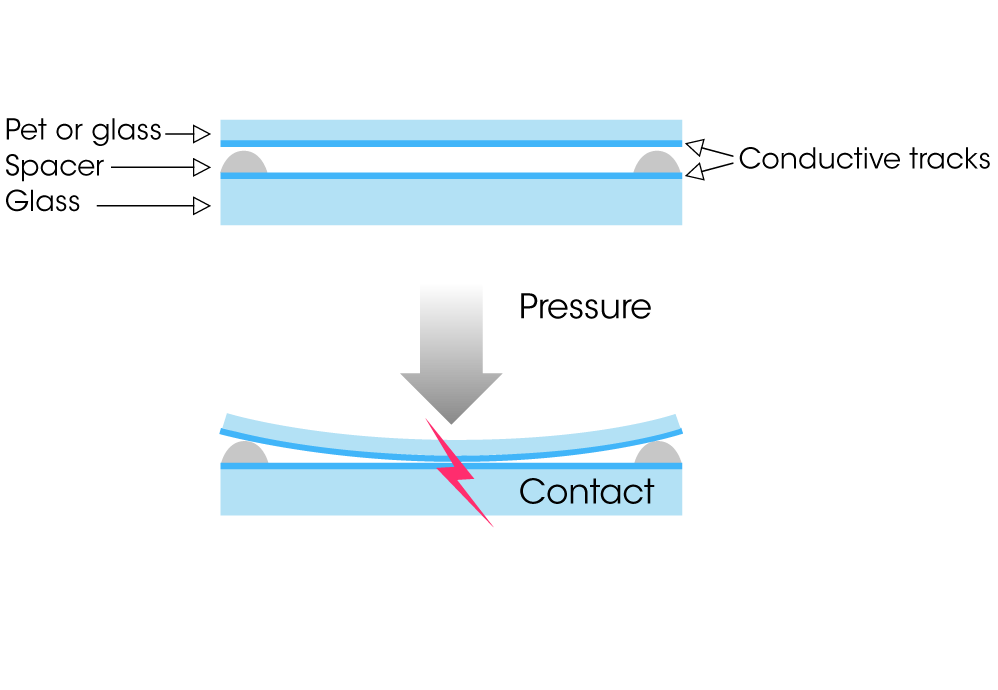

Interpolated voltage sensing matrix (iVSM) is a multi-touch technology that provides smart detection, including handwriting rendering and palm rejection. It allows users to move a pen or stylus (and an unlimited number of fingers) on the screen simultaneously. iVSM is similar to projected capacitive technology in that it uses conductive tracks patterned on two superimposed substrates made of glass or hard plastic. When the user touches the sensor, the top layer bends slightly, creating electrical contact between the two patterned substrates at the precise contact location (Figure 3). A controller chip scans the whole matrix to detect these contacts and tracks them to deliver cursors to the host. However, whereas capacitive technology relies on proximity sensing, iVSM is force-activated, so it works with a pen, a stylus, or practically any other implement.
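The article does not describe the iVSM controller's firmware, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes (hypothetically) that each scan yields a 2-D frame of normalized readings, one per track crossing, with 0.0 meaning no contact. Adjacent active crossings are grouped into one contact, a signal-weighted centroid interpolates each contact's position between tracks, and contacts covering more than a fixed number of cells are discarded as a crude stand-in for palm rejection. The names `find_contacts`, `centroid`, and `scan` and both thresholds are illustrative, not part of any real iVSM API.

```python
from typing import List, Tuple

# Hypothetical frame: one normalized reading per row/column track crossing.
Frame = List[List[float]]
Cell = Tuple[int, int]


def find_contacts(frame: Frame, threshold: float = 0.1) -> List[List[Cell]]:
    """Group above-threshold cells into 4-connected blobs, one blob per contact."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs: List[List[Cell]] = []
    for r in range(rows):
        for c in range(cols):
            if frame[r][c] <= threshold or seen[r][c]:
                continue
            # Flood-fill from this cell to collect the whole contact area.
            stack, blob = [(r, c)], []
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and frame[ny][nx] > threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            blobs.append(blob)
    return blobs


def centroid(frame: Frame, blob: List[Cell]) -> Tuple[float, float]:
    """Signal-weighted centroid: interpolates a position finer than the track pitch."""
    total = sum(frame[y][x] for y, x in blob)
    row = sum(y * frame[y][x] for y, x in blob) / total
    col = sum(x * frame[y][x] for y, x in blob) / total
    return row, col


def scan(frame: Frame, threshold: float = 0.1, max_cells: int = 6) -> List[Tuple[float, float]]:
    """Return interpolated (row, col) cursors for one frame."""
    cursors = []
    for blob in find_contacts(frame, threshold):
        # Hypothetical palm-rejection rule: a contact this large is not a pen or finger.
        if len(blob) > max_cells:
            continue
        cursors.append(centroid(frame, blob))
    return cursors


if __name__ == "__main__":
    # A pen tip straddling two crossings, plus a resting palm (3x3 cells).
    frame = [
        [0, 0.0, 0.0, 0, 0, 0],
        [0, 0.5, 0.5, 0, 0, 0],
        [0, 0.0, 0.0, 0, 0, 0],
        [0, 0.0, 0.0, 1, 1, 1],
        [0, 0.0, 0.0, 1, 1, 1],
        [0, 0.0, 0.0, 1, 1, 1],
    ]
    print(scan(frame))  # [(1.0, 1.5)]: pen interpolated between tracks, palm dropped
```

Interpolating between crossings is what lets a sensor report positions finer than its track spacing. A production controller would also track contacts across successive frames and use far richer palm-rejection heuristics than a simple area cutoff.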

The tablet computer revolution is well under way around the world, and handwriting is becoming an increasingly necessary function. Device designers and vendors should take heed, or they may soon be seeing the handwriting on the wall.