Freescale Semiconductor has just launched a low-cost chip that can be used to protect network-connected, low-power systems from unauthorized access to system-internal resources. Freescale’s target: a chip that can secure the growing number of network endpoints.

When it comes to e-books, images, music, TV episodes and movies, the authors’ and producers’ rights are protected by encryption. Encryption makes it impossible to easily and legally take literature or multimedia on trips or vacations away from the device to which it was originally attached. Further, it makes it impossible to create important backups, even though optical, magnetic and flash memory media can lose part of their content more easily than books or film.

Priceless art in its different forms must be protected. If, however, we separate the unique talent and genius from the money and time invested, we find that DRM (Digital Rights Management) protects investments ranging from a few tens of thousands of dollars to the sometimes high cost of two to three hundred million dollars per movie (exception: the cost of Avatar was estimated at $500M).

Yet, nobody protects the brainchildren of system architects, software and hardware engineers and the investments and hard work that have produced the very systems that made feasible the art and the level of civilization we enjoy today. They are not protected by a DRM where “D” stands for “Designers.” Separating priceless engineering genius and talent from investment, we find similar sums of money invested in hardware and software aside from the value of sensitive data in all its forms that can be stolen from unprotected systems.

Freescale Semiconductor’s recent introduction adds two new members to the QorIQ product family (QorIQ is pronounced ‘coreIQ’). They are the company’s Trust Architecture-equipped QorIQ P1010 and the less fully featured QorIQ P1014. The QorIQ P1010 is designed to protect factory equipment, digital video recorders, low-cost SOHO routers, network-attached storage and other applications that would otherwise present vulnerable network endpoints to copiers of system HW and SW intellectual property, data thieves and malefactors.

It’s difficult to estimate the number of systems that have been penetrated, analyzed and/or cloned by competitors, or modified to offer easy access to data thieves, but some indirect losses that have been published can be used to understand the problem.

In its December 2008 issue, WIRED noted that, according to the FBI, hackers and corrupt insiders have stolen more than 140 million records from US banks and other companies since 2005, accounting each year for a loss of $67 billion. The loss was due to several factors. In a publication dated January 19, 2006, CNET discusses the results of FBI research involving 2,066 US organizations, of which 1,324 suffered losses from computer-security problems over a 12-month period. Respondents spent nearly $12 million to deal with virus-type incidents, $3.2 million on theft, $2.8 million on financial fraud, and $2.7 million on network intrusions. The last number represents mostly system end-user loss, since it’s difficult to estimate the annual damage to the system and software companies that have created the equipment.

Freescale Semiconductor’s new chip offers two-phase secure access to the internals of network-connected, low-cost systems. The first phase accepts passwords and checks the authorization of the requesting agent, be it a person or a machine. The second phase provides access to the system’s HW and SW internals if the correct passwords have been submitted.
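
The two phases can be pictured with a short Python sketch. It is only a model of the idea described above, not Freescale’s implementation: the class `TwoPhaseGate`, its methods, the resource name and the choice of PBKDF2 for password checking are all assumptions made for illustration.

```python
import hashlib
import hmac
import os

# Hypothetical names throughout: this models the two-phase idea only,
# it does not describe the Trust Architecture's actual mechanism.

def derive_verifier(password: str, salt: bytes) -> bytes:
    """Stretch a password into a verifier so the raw password is never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)

class TwoPhaseGate:
    def __init__(self, password: str):
        self._salt = os.urandom(16)
        self._verifier = derive_verifier(password, self._salt)
        self._authorized = False

    def authenticate(self, candidate: str) -> bool:
        """Phase 1: check the submitted credential in constant time."""
        attempt = derive_verifier(candidate, self._salt)
        self._authorized = hmac.compare_digest(attempt, self._verifier)
        return self._authorized

    def read_internal(self, resource: str) -> str:
        """Phase 2: release an internal resource only after phase 1 succeeded."""
        if not self._authorized:
            raise PermissionError("phase 1 has not succeeded")
        return f"contents of {resource}"
```

In this model a requesting agent that calls `read_internal` before a successful `authenticate` is refused, which mirrors the ordering of the two phases.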

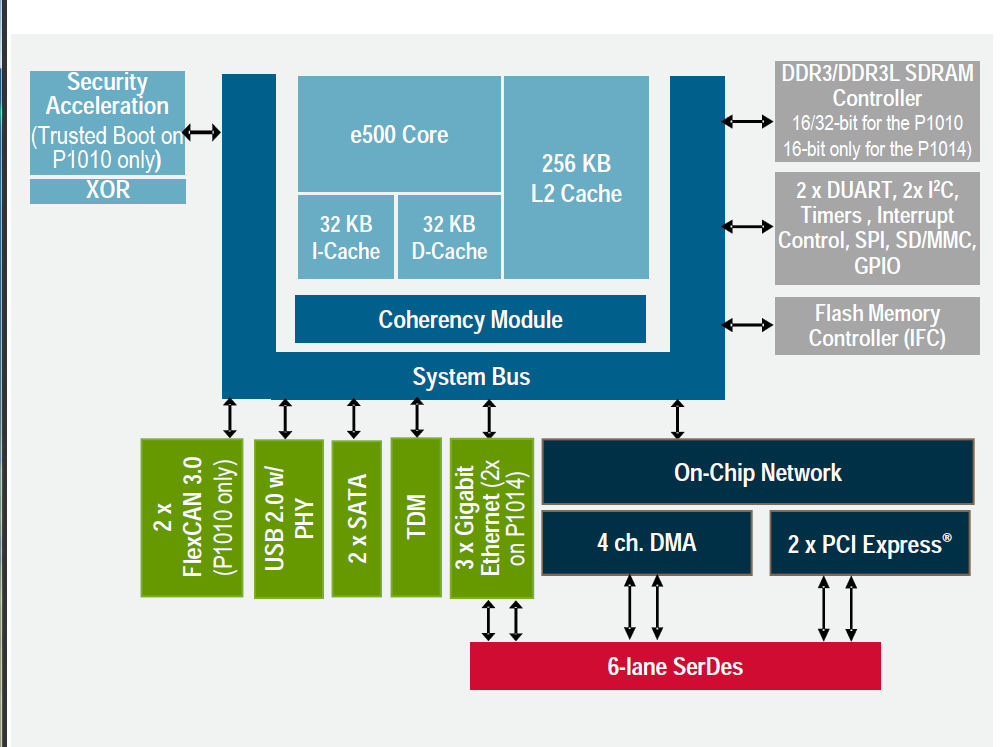

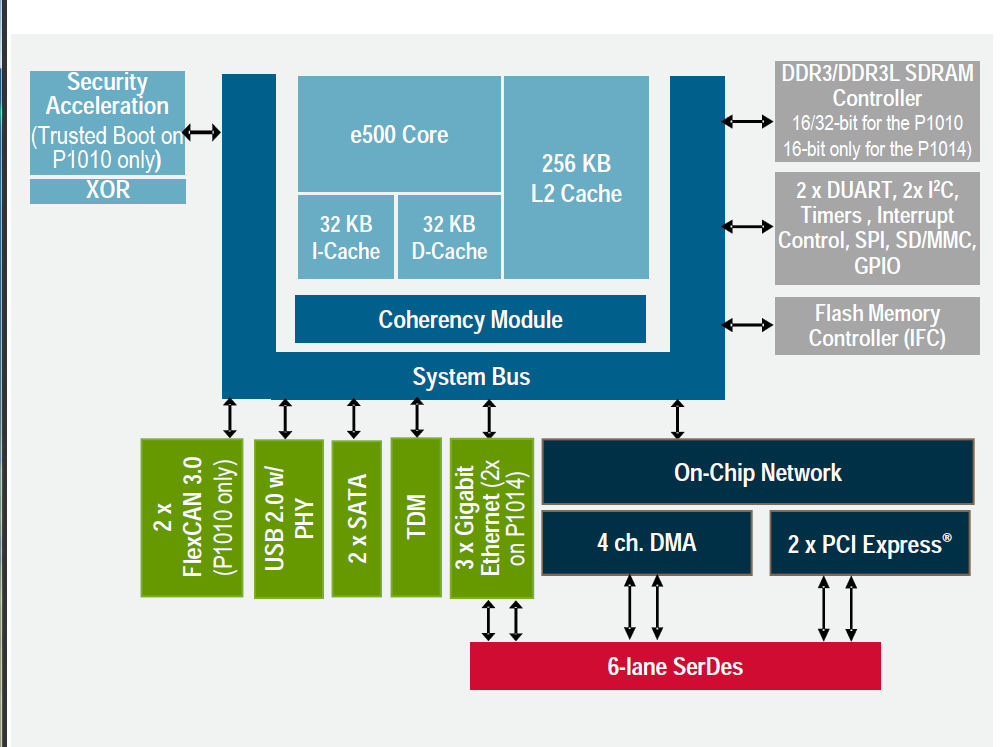

Fabricated in 45nm SOI, Freescale Semiconductor’s QorIQ P1010, shown in the figure, is configured around an e500 core, a next-generation core that’s downward code-compatible with the e300 core that populates the company’s PowerQUICC II Pro communications processors. QorIQ P1010’s Power Architecture e500 core is designed to be clocked at up to 800MHz and is estimated by Freescale Semiconductor to consume less than 1.1W in some applications.

The chip’s single e500 core configuration follows the same concept employed in Freescale Semiconductor’s higher performance QorIQ chip family where protected operating system and applications are executed by multiple e500 cores at higher frequencies. The common configuration has the family’s processing cores and supporting cache levels surrounded by application-specific high bandwidth peripherals that include communication with the network, system-local resources and system-external peripherals.

External peripherals such as cameras will be encountered by the QorIQ P1010 in digital video recorders (DVR) accepting analog video streams from surveillance cameras. The DVRs may employ local storage for locally digitized and encoded video and/or make use of a network to access higher capacity storage and additional processing.

FlexCAN interfaces are the most recent on-chip application-specific peripherals encountered in the QorIQ P1010. The chip’s architects and marketing experts are probably responding to requests coming from potential customers building factory equipment. Aside from e500 cores and peripherals, the denominator common to most chips in the family is the little-documented Trust Architecture.

An approximate idea of the Trust Architecture’s internals and potential can be gleaned from a variety of documents and presentations made by Freescale Semiconductor, from ARM’s Cortex-A series of cores employing a similar approach under that company’s brand name “TrustZone,” and from today’s microcontroller technologies used in the protection of smart cards.

The basic components of a secure system must defend the system whether it’s connected to the network or not, under power or turned off, monitored externally or probed for activity.

In the QorIQ P1010’s configuration of the first phase we should expect to find encrypt-decrypt accelerators used in secure communication with off-chip resources and on-chip resident ROM. The content of these resources should be inaccessible to anyone who does not have access to the decryption keys.

Off-chip resources could include system-internal devices such as SDRAM, Flash memory, optical drives and hard drives. Examples of system-external resources are desktops, network-attached storage, and local or remote servers.

The on-chip resident ROM probably contains the encrypted code of the security monitor and boot sequence. A set of security fuses may be used to provide the necessary encryption-decryption keys. Defining different encryption-decryption keys for different systems would tend to limit the damage done by malefactors, but in case of failure it could render encrypted data useless unless the same keys could be used in a duplicate system. Keys of 256 bits or longer will make breaking into the system very costly in time and money.
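
To put the 256-bit figure in perspective, here is a back-of-the-envelope estimate in Python. It assumes a symmetric key, that exhaustive search is the best available attack, and an attacker speed of 10^12 guesses per second. All three are assumptions chosen for illustration, since the article’s sources do not name the cipher or the threat model.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

def expected_search_years(key_bits: int, guesses_per_second: float) -> float:
    """Average years to find a key by exhaustive search.

    On average an attacker must try half of the 2**key_bits possible keys.
    """
    expected_guesses = 2 ** (key_bits - 1)
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR

# Assumed attacker speed: 10**12 guesses per second (illustrative only).
for bits in (56, 128, 256):
    print(bits, f"{expected_search_years(bits, 1e12):.3g} years")
```

Under these assumptions a 56-bit key falls in well under a year, while a 256-bit key needs on the order of 10^57 years, so an attacker is far more likely to go after the key storage or the surrounding system than the key itself.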

The block diagram of the QorIQ P1010 shows the peripherals incorporated on-chip but understandably offers less information about the Trust Architecture protecting the SoC. The QorIQ P1014 should cost less and should be easier to export since it lacks the Trust Architecture and shows a more modest set of peripherals. (Courtesy of Freescale Semiconductor)

Initial system bring-up, system debug and upgrade processes require access to chip internals. According to Freescale Semiconductor, the chip’s customers will be provided with a choice among three modes of protected access via JTAG: open access until the customer locks it, access that locks without notification after a period allowing debug, and JTAG delivered permanently closed, without access. Customers also have the option to access internals via the chip’s implementation of the Trust Architecture.
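
The three JTAG modes can be summarized as a small state model. The Python sketch below is illustrative only: the names `JtagMode` and `JtagPort`, the `debug_window_s` parameter and the idea of measuring elapsed time in seconds are assumptions, not details published by Freescale.

```python
from enum import Enum

class JtagMode(Enum):
    OPEN_UNTIL_LOCKED = "open until the customer locks it"
    TIMED_LOCK = "locks without notification after a debug window"
    PERMANENTLY_CLOSED = "delivered closed, no access"

class JtagPort:
    """Hypothetical model of the three access modes described in the text."""

    def __init__(self, mode: JtagMode, debug_window_s: float = 0.0):
        self.mode = mode
        self.debug_window_s = debug_window_s  # only meaningful for TIMED_LOCK
        self.customer_locked = False

    def lock(self) -> None:
        """Customer-initiated lock; modeled here as irreversible."""
        self.customer_locked = True

    def access_allowed(self, elapsed_s: float) -> bool:
        """Return True if a debugger may attach elapsed_s seconds into the window."""
        if self.mode is JtagMode.PERMANENTLY_CLOSED or self.customer_locked:
            return False
        if self.mode is JtagMode.TIMED_LOCK:
            return elapsed_s < self.debug_window_s
        return True
```

For example, `JtagPort(JtagMode.TIMED_LOCK, debug_window_s=60.0)` allows access at 59 seconds and refuses it from 60 seconds onward, with no signal to the debugger beyond the refusal.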

Tamper detection is one of the most important components in keeping a secure chip from unauthorized analysis. Smart cards detect tampering in various ways: they monitor voltage, die temperature, light and bus probing, and in some implementations also employ a metal screen to protect the die from probing. We should assume that the system engineer can use the QorIQ P1010 to monitor external sensors through general-purpose inputs/outputs, protecting the system against tampering and ensuring that the system will behave as intended by the original manufacturer.
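
A system engineer’s monitoring loop might reduce to a check like the following Python sketch. The sensor names and limit values are hypothetical placeholders, not figures from Freescale or from any smart card specification, and a real design would read the values from GPIO-attached sensors rather than from a dictionary.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SensorLimit:
    low: float
    high: float

# Hypothetical sensors and limits, chosen only to illustrate the check.
LIMITS = {
    "supply_voltage_v": SensorLimit(3.0, 3.6),
    "die_temperature_c": SensorLimit(-40.0, 105.0),
    "light_level": SensorLimit(0.0, 0.1),
}

def tamper_events(readings: dict[str, float]) -> list[str]:
    """Return the names of sensors that are missing or outside their window.

    A missing reading is treated as tampering, since a cut sensor wire
    should not look like a healthy system.
    """
    events = []
    for name, limit in LIMITS.items():
        value = readings.get(name)
        if value is None or not (limit.low <= value <= limit.high):
            events.append(name)
    return events
```

An empty result means every monitored quantity is inside its window. Anything else would trigger whatever response the designer chooses, such as erasing keys or halting the system.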

The QorIQ P1010’s usefulness depends on the system configuration using it. A “uni-chip” system will be protected if it incorporates hardwired peripherals such as data converters and compressors and employs only the QorIQ P1010 for all its programmable processing functions. According to Freescale Semiconductor’s experts, a system’s data communications with other systems on the network will be protected if the other systems also employ the QorIQ P1010 or other members of the QorIQ chip family. Simple systems of this kind can use the QorIQ P1010 to protect valuable system software and stored data, since, except in proprietary custom designs, the hardware may be easy to duplicate.

Note that systems employing the QorIQ P1010 plus additional programmable SoCs are more vulnerable.

Freescale has not yet announced the price of the QorIQ P1010. To gain market share, the difference in cost between the QorIQ P1010 and an SoC lacking protection should be less than 2% to 3% of the system cost; otherwise, competing equipment lacking protection will sell at lower prices. Freescale Semiconductor has introduced a ready-to-use chip that can become important to end users and system designers. Now all we need to see is pricing.
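
The 2% to 3% rule of thumb is easy to turn into numbers. The Python sketch below uses a hypothetical $200 system cost; the function names and the example figures are assumptions for illustration, since no pricing has been published.

```python
def max_chip_premium(system_cost: float, share: float) -> float:
    """Largest extra chip cost that stays within a given share of system cost."""
    return system_cost * share

def premium_is_competitive(premium: float, system_cost: float,
                           share: float = 0.03) -> bool:
    """True if the added cost of protection is below the chosen share."""
    return premium < max_chip_premium(system_cost, share)

# Hypothetical $200 system: the protected chip may add roughly $4 to $6.
low = max_chip_premium(200.0, 0.02)
high = max_chip_premium(200.0, 0.03)
print(f"allowable premium: ${low:.2f} to ${high:.2f}")
```

For a low-cost SOHO router the same percentages leave far less room in absolute terms, which is why the eventual price matters so much.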