To enable intelligent adaptation in multiprocessing designs that perform system-wide load balancing, we implemented several functions and added them to the processors. Examples are continuous resource and workload monitoring as well as bandwidth monitoring of the network link. These functions allow a device to react promptly when (1) the workload exceeds the available resources because the workload itself varies, or (2) the available processing power drops, for example because the batteries are running down. In both cases, the processing of some tasks should be transferred to more powerful devices on the network.

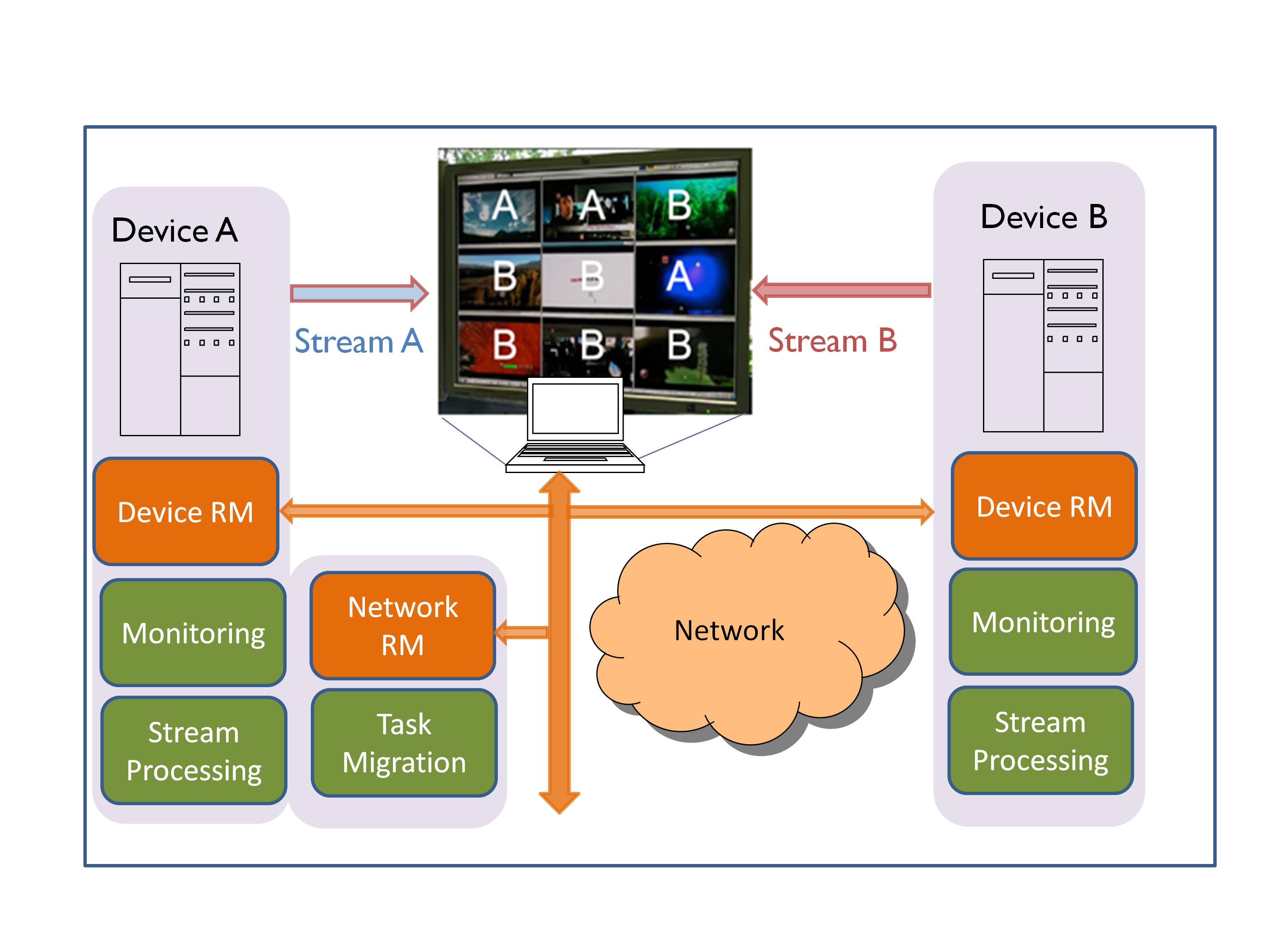

In our experiment, device A and device B both implement the decoding of video streams, followed by an image analysis function (a logo detector used for quality control) and re-encoding of the video streams (see Figure 2). Depending on the resource availability at each device, some stream processing tasks are transferred seamlessly to the less heavily loaded device. Finally, the output of all processed streams is sent to a display client. This task migration automatically lowers the workload at the overloaded device while all videos are displayed without any artifacts.

We implement a Device Runtime Manager (RM) at each device that monitors the workload, resources, video quality, and link bandwidth of that device. Note that bandwidth monitoring is required to guarantee that the processed data fits within the available bandwidth.
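The kind of information a Device RM collects can be illustrated with a minimal sketch. The `DeviceReport` class, its field names, the units, and the numbers below are assumptions made for illustration only; they do not describe the actual implementation.

```python
from dataclasses import dataclass


@dataclass
class DeviceReport:
    """Hypothetical snapshot a Device RM could send to the Network RM."""
    device: str
    workload: float        # current load, in arbitrary processing units
    capacity: float        # processing units currently available
    link_bandwidth: float  # available link bandwidth, in Mbit/s
    output_bitrate: float  # total bitrate of the processed streams, in Mbit/s

    @property
    def overloaded(self) -> bool:
        # The workload exceeds the available resources.
        return self.workload > self.capacity

    @property
    def bandwidth_ok(self) -> bool:
        # The processed data fits within the available link bandwidth.
        return self.output_bitrate <= self.link_bandwidth


# Illustrative values only: nine streams on a device that can handle six.
report = DeviceReport("A", workload=9.0, capacity=6.0,
                      link_bandwidth=100.0, output_bitrate=36.0)
print(report.overloaded, report.bandwidth_ok)
```

Keeping the overload check and the bandwidth check separate mirrors the text: a device can have spare processing power and still be unable to ship its output over the link.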

While the Device RM takes care of load balancing within a device, a Network Runtime Manager is implemented to perform load balancing between the devices in the network. To do so, it receives the monitored information from each individual Device RM and decides on which device each task is executed. This way, when the resources at device A are insufficient for the existing workload, the Network RM shifts some stream processing tasks from device A to device B. This task distribution depends on the resource availability at both devices. In our experiment, the screen of the display client shows on which device each displayed video stream is currently processed. In the example in Figure 2, due to a lack of resources at device A, the processing of 6 video streams has been migrated to device B while the remaining 3 are processed on device A.
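The decision made by the Network RM can be sketched as a simple greedy policy: while a device is overloaded, move one task to the device with the most headroom. This is only one possible policy, not necessarily the one used in the experiment. The function names, the uniform per-stream cost, and the capacity values are hypothetical, chosen so that the outcome matches the 6/3 split described above.

```python
from typing import Dict, List, Tuple


def load(tasks: List[str], cost: Dict[str, float]) -> float:
    """Total cost of the tasks assigned to one device."""
    return sum(cost[t] for t in tasks)


def rebalance(assignment: Dict[str, List[str]],
              cost: Dict[str, float],
              capacity: Dict[str, float]
              ) -> Tuple[Dict[str, List[str]], List[Tuple[str, str, str]]]:
    """Greedily move tasks away from overloaded devices.

    Returns the new assignment and the list of (task, source, target) moves.
    """
    result = {dev: list(tasks) for dev, tasks in assignment.items()}
    moves = []
    for src in result:
        while result[src] and load(result[src], cost) > capacity[src]:
            others = [d for d in result if d != src]
            if not others:
                break
            # Target: the other device with the most spare capacity.
            dst = max(others, key=lambda d: capacity[d] - load(result[d], cost))
            task = result[src][-1]
            if load(result[dst], cost) + cost[task] > capacity[dst]:
                break  # no other device can absorb this task
            result[src].pop()
            result[dst].append(task)
            moves.append((task, src, dst))
    return result, moves


# Assumed numbers: nine equal-cost streams start on device A.
streams = [f"stream{i}" for i in range(9)]
cost = {s: 1.0 for s in streams}
result, moves = rebalance({"A": streams, "B": []}, cost, {"A": 3.0, "B": 10.0})
print(len(result["A"]), len(result["B"]))
```

Moving one task per loop iteration keeps each step small, so the same loop body could also be run once per monitoring interval to shift tasks gradually.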

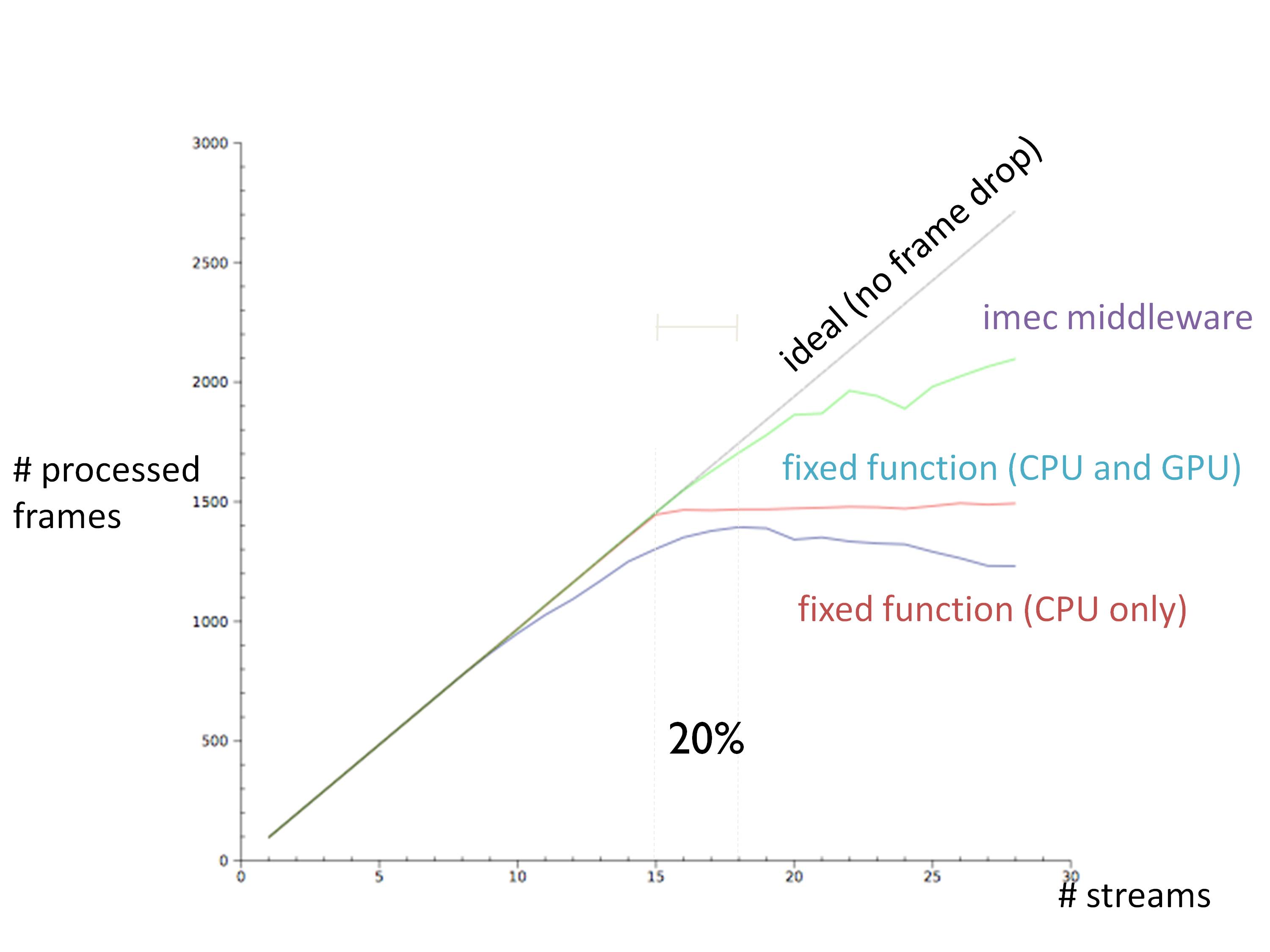

Similarly, Figure 3 shows the monitored workload on both device A and device B. Around time 65s, several stream processing tasks are added to device A, which overloads it. To decrease the load on device A, the Network RM gradually shifts tasks from device A to device B, resulting in the load distribution shown from time 80s onward. This way, load balancing between devices in the network overcomes the processing limitations of device A.

Another way to implement load balancing between devices is to down-scale the task requirements. For video decoding applications, for example, this may translate into reducing the spatial or temporal resolution of the video stream. In another experimental setup, when the workload on a decoding client becomes too high, the server transcodes the video streams to a lower resolution before sending them to the client device. This reduces the workload on the client device at the cost of increased processing at the server.
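A minimal sketch of such a down-scaling decision follows. It assumes, purely for illustration, that decoding cost is proportional to the number of pixels decoded per second; the resolution ladder, the budget value, and the function names are hypothetical and not taken from the experiment.

```python
from typing import List, Optional, Tuple

# Hypothetical resolution ladder, from highest to lowest.
LADDER: List[Tuple[int, int]] = [(1920, 1080), (1280, 720), (854, 480), (640, 360)]


def decode_cost(width: int, height: int, fps: int) -> int:
    """Proxy for decoding workload: pixels decoded per second (an assumption)."""
    return width * height * fps


def pick_resolution(budget: int, fps: int,
                    ladder: List[Tuple[int, int]] = LADDER
                    ) -> Optional[Tuple[int, int]]:
    """Return the highest resolution whose cost fits the client's budget.

    Returns None when even the lowest resolution is too expensive.
    """
    for width, height in ladder:
        if decode_cost(width, height, fps) <= budget:
            return (width, height)
    return None


# A client that can decode 30 million pixels per second at 25 frames per second.
print(pick_resolution(30_000_000, 25))
```

Lowering `fps` instead of the resolution would correspond to reducing the temporal rather than the spatial resolution; the same cost proxy covers both.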

Note that we chose to place the Network RM at device A, but it could be located at any other network device because the overhead involved is very low. Note also that in both experiments the decision to migrate tasks or adapt the content is made to fit the processing constraints and to guarantee the desired quality of service.

However, another motivation for load balancing can be to minimize the energy consumption of a specific device, extending its battery lifetime, or even to control the processors’ temperature. Think of cloud computing data centers, where cooling systems are critical and account for a large fraction of the energy consumed. Load balancing between servers could be used to control the processors’ temperature by switching processors on and off or by moving tasks between them. This could potentially reduce the cooling effort and pave the way for greener and more efficient data centers.

We are facing a future in which applications are becoming increasingly complex and demanding, with highly dynamic and unpredictable workloads. To tackle these challenges, devices in the network must be able to adapt and cooperate flexibly. Our research and experiments contribute to an environment of intelligent and flexible devices that optimally exploit and share their own resources and those available in the network while adapting to system dynamics such as workload, resources, and bandwidth.